MIT-6.175 简介/目录

什么是 6.175?

6.175 通过实现不同版本的带缓存、分支预测和虚拟内存的流水线机器,教授计算机架构的基本原理。强调编写和评估可以模拟和合成到真实硬件或在 FPGA 上运行的架构描述。使用和设计测试台。本课程适合想要将计算机科学技术应用于复杂硬件设计的学生。

课题包括组合电路(包括加法器和乘法器)、多周期和流水线功能单元、RISC 指令集架构 (ISA)、非流水线和多周期处理器架构、2 至 10 阶段顺序流水线架构、带缓存和层次内存系统的处理器、TLB 和页面错误、I/O 中断。

讲师

- Arvind

- Quan Nguyen (助教)

讲座

周一周三周五 下午 3:00, 34-302。

实验课程目录

- MIT-6.175 简介/目录

- 实验 0: 入门

- 实验 1: 多路选择器和加法器

- 实验 2: 乘法器

- 实验 3: 快速傅里叶变换管道

- 实验 4: N 元 FIFOs

- 实验 5: RISC-V 引介 - 多周期与两阶段流水线

- 实验 6: 具有六阶段流水线和分支预测的 RISC-V 处理器

- 实验 7: 带有 DRAM 和缓存的 RISC-V 处理器

- 实验 8: 具有异常处理的 RISC-V 处理器

项目

日程安排

| 周数 | 日期 | 描述 | 下载链接 |

|---|---|---|---|

| 1 | 周三, 9月7日 | 讲座 1: 介绍 | [pptx] [pdf] |

| 周五, 9月9日 | 讲座 2: 组合电路 实验 0 发布, 实验 1 发布 | [pptx] [pdf] | |

| 2 | 周一, 9月12日 | 讲座 3: 组合电路 2 | [pptx] [pdf] |

| 周三, 9月14 | 讲座 4: 时序电路 | [pptx] [pdf] | |

| 周五, 9月16 | 讲座 5: 时序电路 2 实验 1 截止, 实验 2 发布 | [pptx] [pdf] | |

| 3 | 周一, 9月19日 | 讲座 6: 组合电路的流水线化 | [pptx] [pdf] |

| 周三, 9月21日 | 讲座 7: 基本良好的 BSV 程序 短暂历史寄存器 | [pptx] [pdf] | |

| 周五, 9月23日 | 无课: 学生假日 (秋季招聘会) 实验 3 发布 | ||

| 4 | 周一, 9月26日 | 讲座 8: 多规则系统与规则的并发执行 实验 2 截止 | [pptx] [pdf] |

| 周三, 9月28日 | 讲座 9: 保护条件 | [pptx] [pdf] | |

| 周五, 9月30日 | 辅导课 1: Bluespec | [pptx] [pdf] | |

| 5 | 周一, 10月3 | 讲座 10: 非流水线处理器 实验 4 发布 | [pptx] [pdf] |

| 周三, 10月5日 | 讲座 11: 非流水线和流水线处理器 实验 3 截止 | [pptx] [pdf] | |

| 周五, 10月7日 | 辅导课 2: 高级 Bluespec | [pptx] [pdf] | |

| 6 | 周一, 10月10日 | 无课: 原住民日 / 哥伦布日 | |

| 周二, 10月11日 | 实验 5 发布 | ||

| 周三, 10月12日 | 讲座 12: 控制冒险 实验 4 截止 | [pptx] [pdf] | |

| 周五, 10月14 | 辅导课 3: RISC-V 处理器 RISC-V 和调试 | [pptx] [pdf] | |

| 7 | 周一, 10月17日 | 讲座 13: 数据冒险 | [pptx] [pdf] |

| 周三, 10月19日 | 讲座 14: 多阶段流水线 实验 6 发布 | [pptx] [pdf] | |

| 周五, 10月21日 | 辅导课 4: 调试时代和记分板 实验 5 截止 | [pptx] [pdf] | |

| 8 | 周一, 10月24日 | 讲座 15: 分支预测 实验 5 截止 | [pptx] [pdf] |

| 周三, 10月26日 | 讲座 16: 分支预测 2 | [pptx] [pdf] | |

| 周五, 10月28日 | 辅导课 5: 时代和分支预测器 时代、调试和缓存 | [pptx] [pdf] | |

| 9 | 周一, 10月31日 | 讲座 17: 缓存 | [pptx] [pdf] |

| 周三, 11月2日 | 讲座 18: 缓存 2 实验 7 发布 | [pptx] [pdf] | |

| 周五, 11月4日 | 辅导课 6: 缓存和异常 实验 6 截止 | [pptx] [pdf] | |

| 10 | 周一, 11月7日 | 讲座 19: 异常 实验 6 截止 | [pptx] [pdf] |

| 周三, 11月9日 | 讲座 20: 虚拟内存 | [pptx] [pdf] | |

| 周五, 11月11日 | 无课: 退伍军人节 | ||

| 11 | 周一, 11月14日 | 讲座 21: 虚拟内存和异常 实验 8 发布 | [pptx] [pdf] |

| 周三, 11月16日 | 讲座 22: 缓存一致性 实验 7 截止 | [pptx] [pdf] | |

| 周四, 11月17日 | 实验 8 发布 | ||

| 周五, 11月18日 | 辅导课 7: 项目概述 实验 7 截止, 项目第一部分 发布 | [pptx] [pdf] | |

| 12 | 周一, 11月21日 | 讲座 23: 顺序一致性 | [pptx] [pdf] |

| 周三, 11月23日 | 辅导课 8: 项目第二部分: 一致性 取消: (提前) 感恩节 实验 8 截止 | ||

| 周五, 11月25日 | 无课: 感恩节 实验 8 截止 | ||

| 13 | 周一, 11月28日 | 无课: 从事项目 项目第二部分发布 | |

| 周三, 11月30日 | 无课: 从事项目 | ||

| 周四, 12月1日 | 项目第二部分 发布 | ||

| 周五, 12月2日 | 无课: 从事项目 辅导课 8: 项目第二部分: 一致性 | [pptx] [pdf] | |

| 14 | 周一, 12月5日 | 无课: 从事项目 | |

| 周三, 12月7日 | 无课: 从事项目 | ||

| 周五, 12月9日 | 无课: 从事项目 | ||

| 15 | 周一, 12月12日 | 无课: 从事项目 | |

| 周三, 12月14日 | 课程最后一天 项目展示 |

© 2016 麻省理工学院 版权所有。

实验 0: 入门

在本课程中,你将使用共享机器来完成实验。这些机器包括从 vlsifarm-03.mit.edu 到 vlsifarm-08.mit.edu。你可以通过使用你的Athena用户名和密码通过ssh登录这些机器。

本文档将指导你完成实验所需的一些操作,如获取每个实验的初始代码。首先使用ssh客户端登录上述任一服务器。

设置工具链

执行以下命令来设置你的环境并访问工具链:

add 6.175

source /mit/6.175/setup.sh

第一个命令使你能够访问课程锁定目录 /mit/6.175,并且每台电脑只需运行一次。第二个命令配置你当前的环境以包括实验所需的工具,每次登录工作时都需要运行。

使用Git获取和提交实验代码

参考设计提供在Git仓库中。你可以使用以下命令将它们克隆到你的工作目录中(用实验编号替换 labN,例如 lab1 和 lab2):

git clone $GITROOT/labN.git

注意:如果 "git clone" 失败,可能是因为我们没有你的Athena用户名。请给我发送电子邮件(至 qmn mit),我将为你创建一个远程仓库。

此命令在你当前目录中创建一个 labN 目录。$GITROOT 环境变量是唯一的,因此这个仓库将是你个人的仓库。在该目录中,可以使用实验讲义中指定的指令运行测试台。

讨论问题应该在提供的代码中的 discussion.txt 文件中回答。

如果你想添加任何新文件,除了助教提供的文件外,你需要使用以下命令添加新文件(在这个例子中,是 git 中的 newFile):

git add newFile

你可以在达到一个里程碑时本地提交你的代码:

git commit -am "Hit milestone"

通过添加任何必要的文件然后使用以下命令提交你的代码:

git commit -am "Finished lab"

git push

如有必要,你可以在截止日期前多次提交。

编写实验的Bluespec SystemVerilog(BSV)代码

在 vlsifarm-0x 上

如果你还不熟悉Linux命令行环境,6.175将是一个很好的学习机会。测试你的BSV代码,你需要在Linux环境下运行bsc,即BSV编译器。在同一台机器上编写BSV代码是有意义的。

虽然你可以使用许多文本编辑器,但只有Vim和Emacs为Bluespec提供了BSV语法高亮。Vim语法高亮文件可以通过运行以下命令安装:

/mit/6.175/vim_copy_BSV_syntax.sh

Emacs语法高亮文件可以在课程资源页面找到。你的助教曾经使用Emacs,但后来转用了Vim。他无法声称知道如何安装高亮模式文件,甚至是否有效。如果你是Emacs用户并愿意就此事贡献文档,请发邮件给课程工作人员。

在 Athena 集群上

你在 vlsifarm 机器上的家目录与任何 Athena 机器上的家目录相同。因此,你可以在Athena机器上使用gedit或其他图形文本编辑器编写代码,然后登录到vlsifarm机器上运行它。

在你自己的机器上

你也可以使用文件传输程序在

你的Athena家目录和你自己的机器之间移动文件。MIT在网上提供了关于安全传输文件的帮助,网址为 http://ist.mit.edu/software/filetransfer。

在其他机器上编译BSV

BSV也可以在非vlsifarm机器上编译。这在实验截止日期临近时vlsifarm机器繁忙时可能很有用。

在 Athena 集群上

用于vlsifarm机器的指令也适用于基于Linux的Athena机器。只需打开一个终端,像在vlsifarm机器上一样运行命令即可。

在你自己的基于Linux的机器上

要在你自己的基于Linux的机器上运行6.175实验,你需要在计算机上安装以下软件:

- OpenAFS以访问课程锁定目录

- Git以访问和提交实验

- GMP (libgmp.so.3)以运行BSV编译器

- Python以运行构建脚本

旁注:类似的设置也可能适用于Mac OS X / macOS。如果你让这样的设置工作,请向助教提供详细信息。

OpenAFS

在你的本地机器上安装OpenAFS将使你能够访问包含所有课程锁定目录的目录 /afs/athena.mit.edu。你将需要在根目录中创建一个名为/mit的文件夹,并在其中使用符号链接指向必要的课程锁定目录。

CSAIL TIG有一些关于如何为Ubuntu安装OpenAFS的信息,网址为 http://tig.csail.mit.edu/wiki/TIG/OpenAFSOnUbuntuLinux。这些指令用于访问 /afs/csail.mit.edu,但你需要访问 /afs/athena.mit.edu 来进行实验,所以无论何时看到csail都用athena替换。当你在你的机器上安装OpenAFS时,它会给你一个包含许多域的 /afs 文件夹。此网站还包含了使用你的用户名和密码登录以获取需要身份验证的文件的指令。你需要每天工作或每次重置计算机时都执行此操作,以便访问6.175课程锁定目录。

接下来你需要在根目录创建一个名为mit的文件夹,并在其中填充指向课程仓库的符号链接。在Ubuntu和类似的分发版上,命令如下:

cd /

sudo mkdir mit

cd mit

sudo ln -s /afs/athena.mit.edu/course/6/6.175 6.175

现在你可以在 /mit/6.175 文件夹中访问课程锁定目录了。

Git

在Ubuntu和类似的分发版上,你可以用以下命令安装Git:

sudo apt-get install git

GMP (libgmp.so.3)

BSV编译器使用libgmp来处理无界整数。在Ubuntu和类似的分发版上安装它,使用命令:

sudo apt-get install libgmp3-dev

如果你的机器上安装了libgmp,但你没有libgmp.so.3,你可以创建一个名为libgmp.so.3的符号链接,指向不同版本的libgmp。

Python

在Ubuntu和类似的分发版上,你可以使用以下命令安装Python:

sudo apt-get install python

在你基于Linux的机器上设置工具链

原始的 setup.sh 脚本在你的机器上不会工作,所以你将需要使用

source /mit/6.175/local_setup.sh

来设置工具链。完成这些后,你应该能够像在你自己的机器上

一样正常使用这些工具。

© 2016 麻省理工学院。版权所有。

实验 1: 多路选择器和加法器

实验 1 截止日期: 2016年9月16日,星期五,晚上11:59:59 EDT。

实验 1 的交付物包括:

- 在

Multiplexer.bsv和Adders.bsv中对练习 1-5 的回答,- 在

discussion.txt中对讨论问题的回答。

引言

在本实验中,你将从基本门原语构建多路选择器和加法器。首先,你将使用与门、或门和非门构建一个 1 位多路选择器。接下来,你将使用 for 循环编写一个多态性多路选择器。然后,你将转向加法器的工作,使用全加器构建一个 4 位加法器。最后,你将修改一个 8 位行波进位加法器,将其改为选择进位加法器。

本实验用作简单组合电路和 Bluespec SystemVerilog (BSV) 的引入。尽管 BSV 包含用于创建电路的高级功能,本实验将侧重于使用低级门来创建用于高级电路的块,例如加法器。这强调了 BSV 编译器生成的硬件。

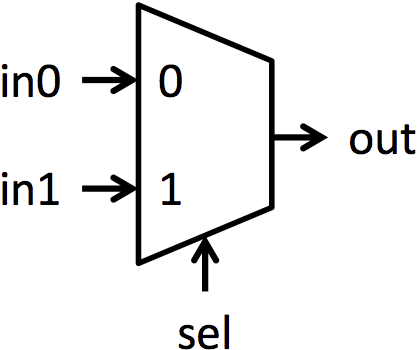

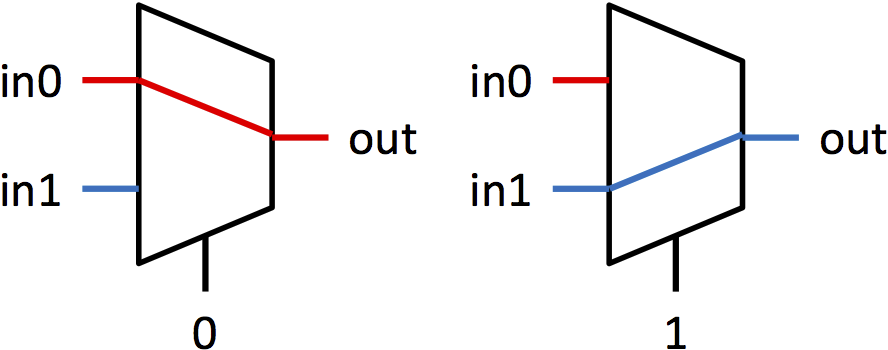

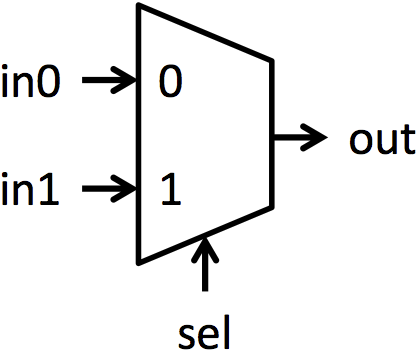

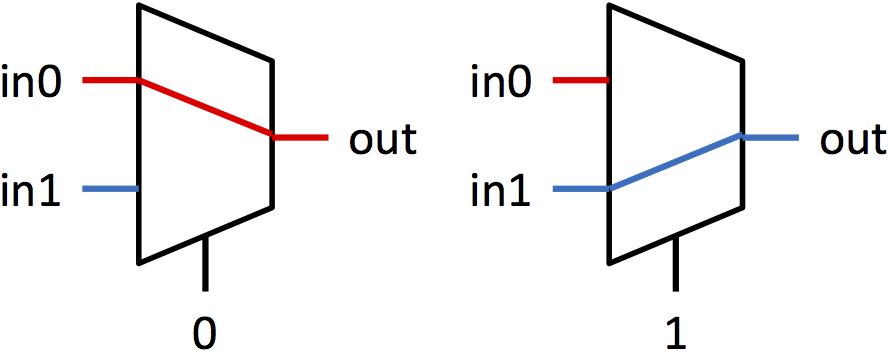

多路选择器

多路选择器(简称 muxes)是在多个信号之间选择的块。多路选择器有多个数据输入 inN,一个选择输入 sel,和一个单一输出 out。sel 的值决定哪个输入显示在输出上。本实验中的多路选择器都是 2 路多路选择器。这意味着将有两个输入可以选择(in0 和 in1),并且 sel 是一个单一位。如果 sel 为 0,则 out = in0;如果 sel 为 1,则 out = in1。图 1a 显示了用于多路选择器的符号,图 1b 以图形方式显示了多路选择器的功能。

|  |

|---|---|

| (a) 多路选择器符号 | (b) 多路选择器功能 |

图 1:1 位多路选择器的符号和功能

加法器

加法器是数字系统的基本构建块。有许多不同的加法器架构都可以计算相同的结果,但它们以不同的方式达到结果。不同的加法器架构在面积、速度和功耗方面也有所不同,并且没有一种架构在所有领域都超越其他加法器。因此,硬件设计师根据系统面积、速度和功耗约束选择加法器。

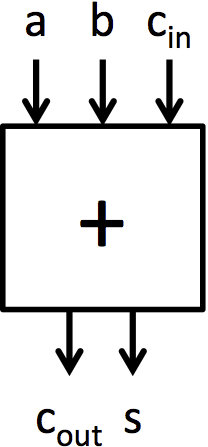

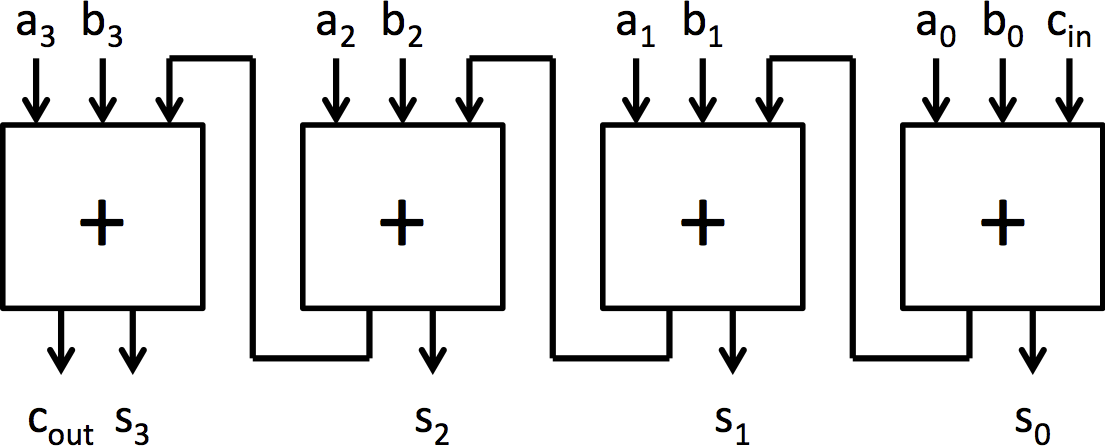

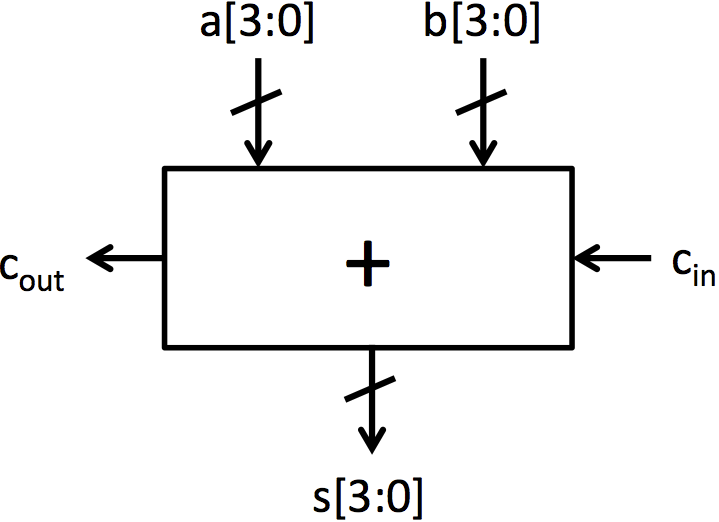

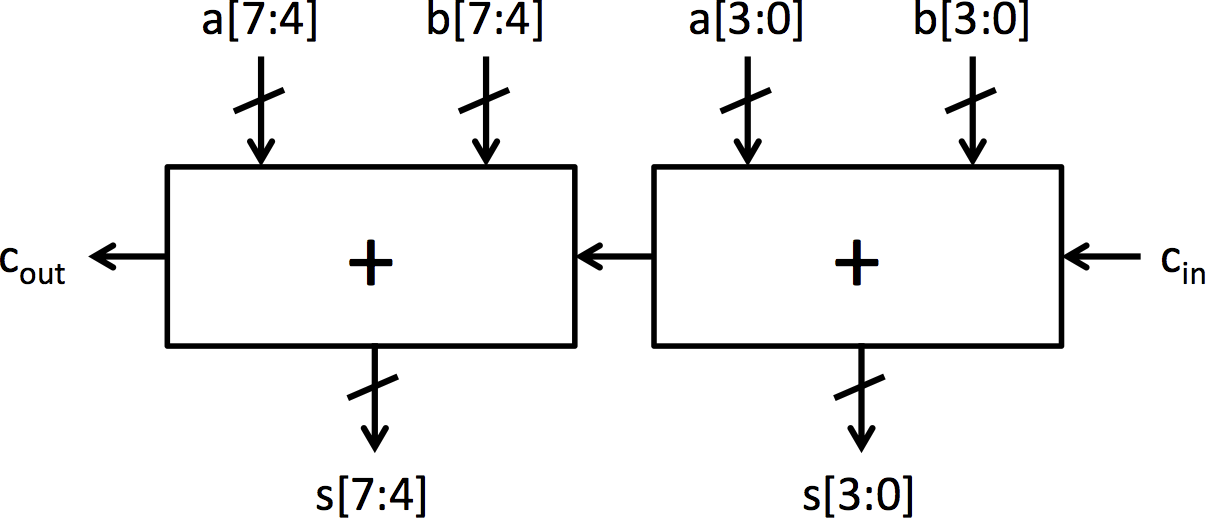

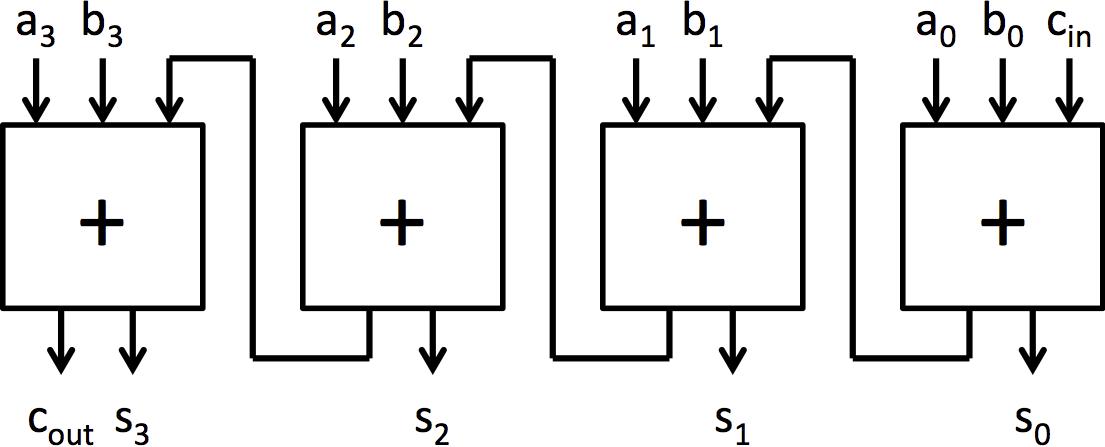

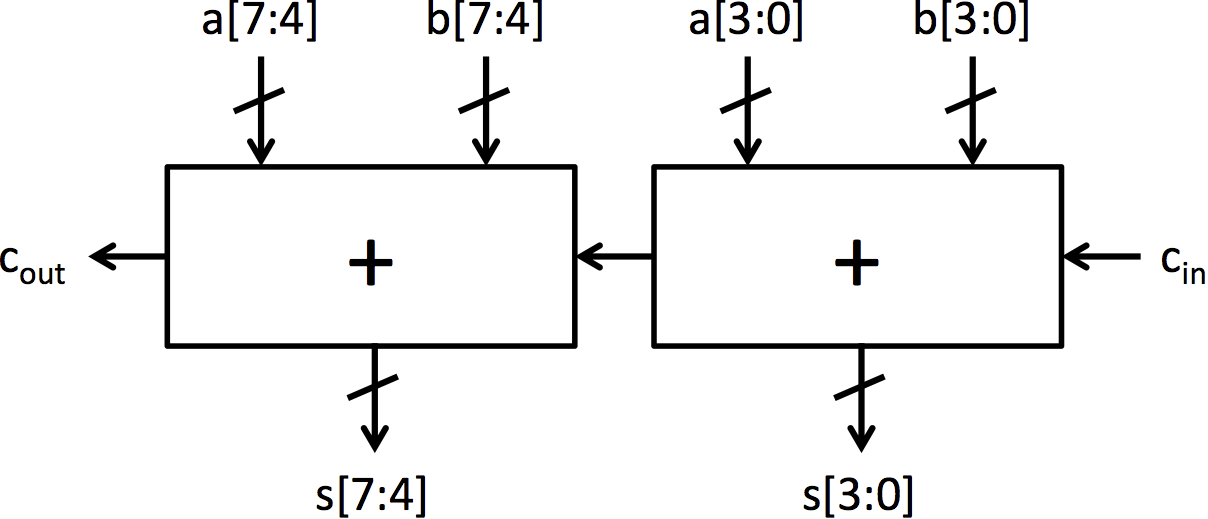

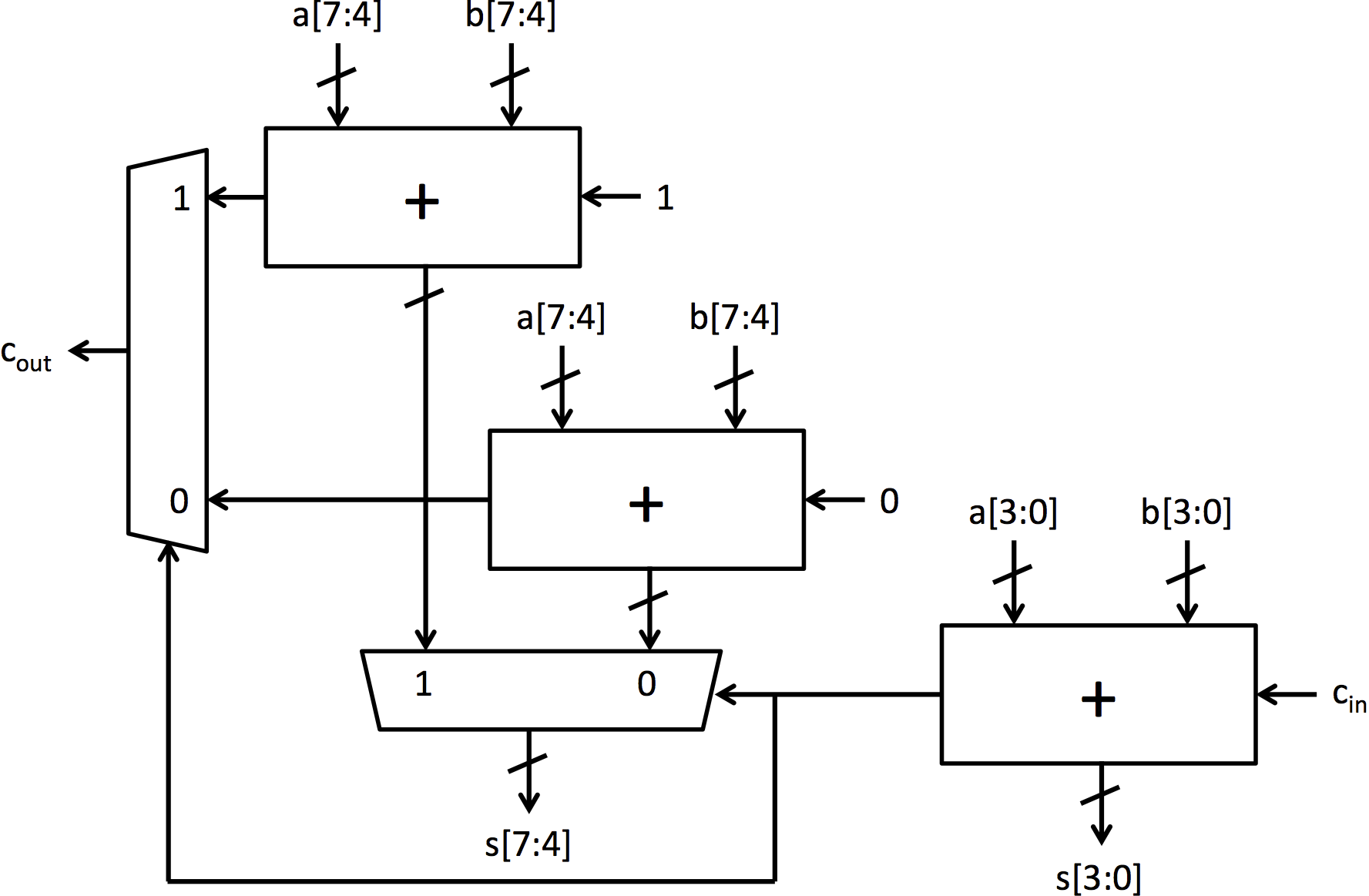

我们将探索的加法器架构是行波进位加法器和选择进位加法器。行波进位加法器是最简单的加法器架构。它由通过进位链连接的全加器块链组成。图 2b 中可以看到一个 4 位行波进位加法器。它非常小,但也非常慢,因为每个全加器必须等待前一个全加器完成后才能计算其位。

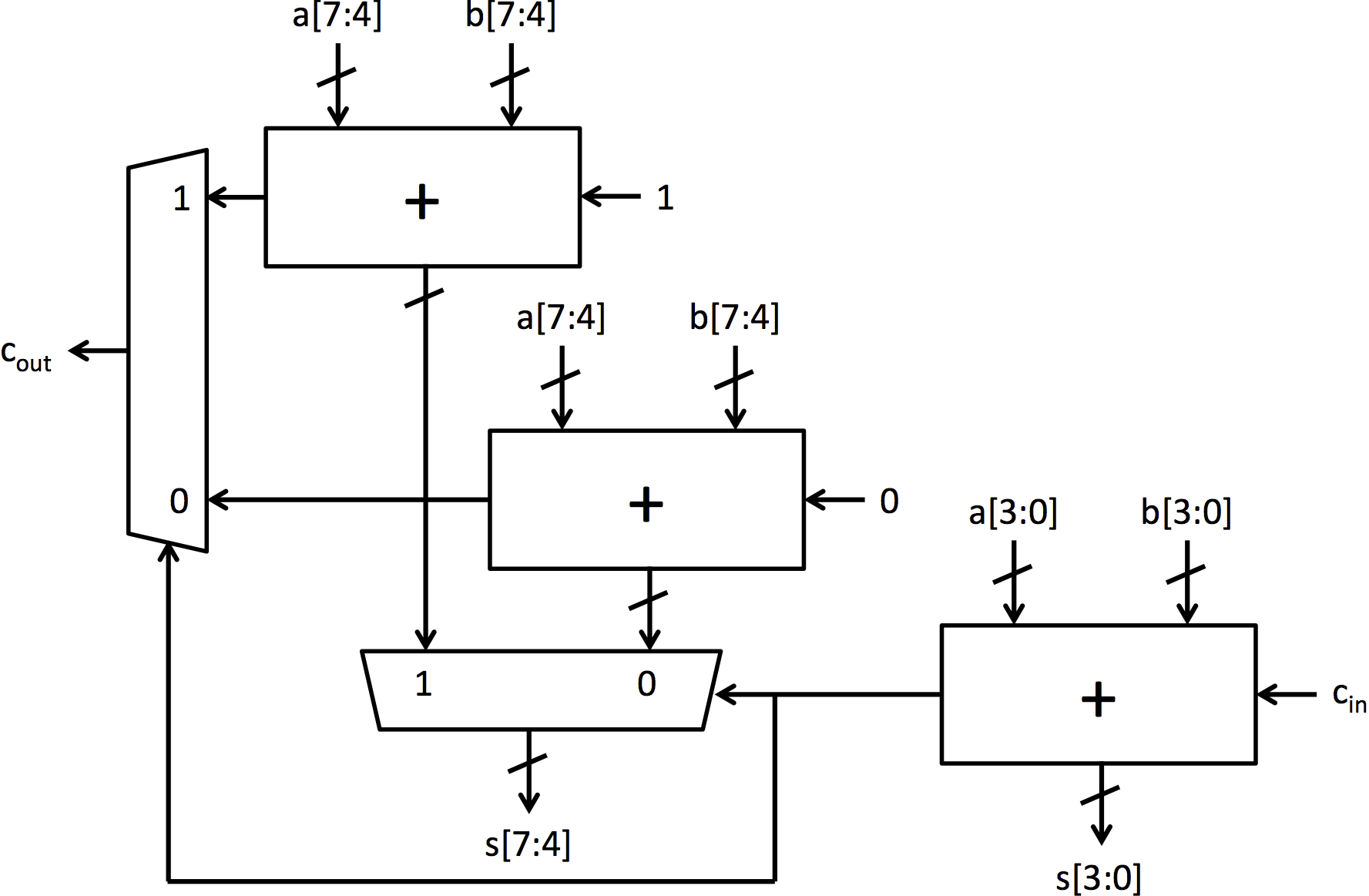

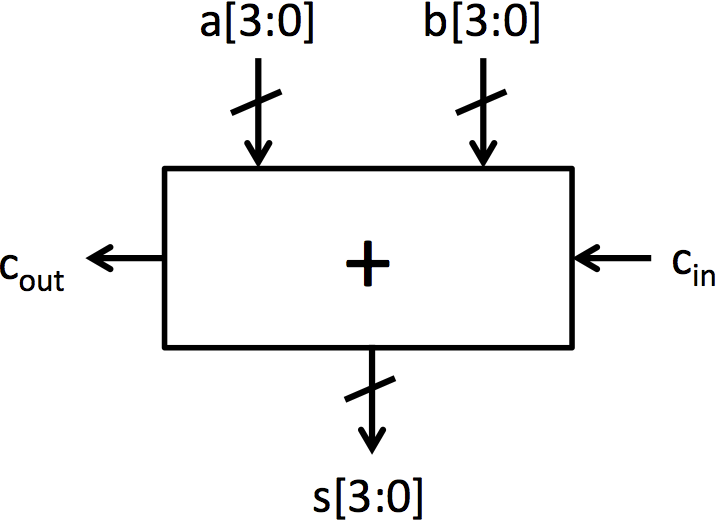

选择进位加法器为行波进位加法器添加了预测或推测以加快执行速度。它以与行波进位加法器相同的方式计算底部位,但计算顶部位的方式不同。它不等待来自下面位的进位信号计算,而是计算顶部位的两个可能结果:一个

假设下面的位没有进位,另一个假设有进位。一旦计算出进位位,多路选择器就会选择与进位位相对应的顶部位。图 3 中可以看到一个 8 位选择进位加法器。

|  |

|---|---|

| (a) 全加器 | (b) 由全加器构建的 4 位行波进位加法器 |

|  |

|---|---|

| (c) 4 位加法器符号 | (d) 8 位行波进位加法器 |

图 2:由全加器块构建的 4 位加法器和 8 位加法器

|

|---|

图 3:8 位选择进位加法器

测试台

已经编写了用于测试你的代码的测试台,测试台的链接包含在本实验的仓库中。文件 TestBench.bsv 包含多个可以使用提供的 Makefile 单独编译的测试台。Makefile 有每个模拟器可执行文件的目标,每个目标和可执行文件的使用在本手册中进行了解释。每个可执行文件在程序工作时打印出 PASSED,在程序遇到错误时打印出 FAILED。

以 Simple 结尾的测试台结构简化了,并且它们输出了来自单元测试期间单元的所有数据,以便你可以看到单元的工作情况。如果你有兴趣测试这些单元的自己的案例,你可以修改简单的测试台以输入你请求的值。普通测试台会为输入值生成随机数。

在 BSV 中构建多路选择器

构建我们的选择进位加法器的第一步是从门构建一个基本的多路选择器。让我们首先检查 Multiplexer.bsv。

function Bit#(1) multiplexer1(Bit#(1) sel, Bit#(1) a, Bit#(1) b);

return (sel == 0)? a: b;

endfunction

第一行开始定义一个名为 multiplexer1 的新函数。这个多路选择器函数接收几个参数,这些参数将用于定义多路选择器的行为。这个多路选择器操作单比特值,具体类型 Bit#(1)。稍后我们将学习如何实现多态函数,这些函数可以处理任何宽度的参数。

这个函数在其定义中使用了类似 C 的构造。如多路选择器这样简单的代码可以在高层次上定义,而不会带来实现上的惩罚。然而,由于硬件编译是一个复杂的多维问题,工具在它们可以执行的优化类型上是有限的。

return 语句构成了整个函数,它接收两个输入并使用 sel 选择其中之一。endfunction 关键字完成了我们多路选择器函数的定义。你应该能够编译该模块。

练习 1(4 分): 使用与门、或门和非门重新实现函数

multiplexer1 在 Multiplexer.bsv 中。需要多少个门?(所需的函数分别称为 and1、or1 和 not1,在 Multiplexers.bsv 中提供。)

静态展开

现实世界系统中的许多多路选择器都大于 1 位宽。我们需要大于单比特的多路选择器,但手动实例化 32 个单比特多路选择器以形成一个 32 位多路选择器将是乏味的。幸运的是,BSV 提供了强大的静态展开构造,我们可以使用它来简化编写代码的过程。静态展开是指 BSV 编译器在编译时评估表达式的过程,使用结果来生成硬件。静态展开可以用几行代码表达极其灵活的设计。

在 BSV 中,我们可以使用方括号 ([]) 索引更宽 Bit 类型中的单个位,例如 bitVector[1] 选择 bitVector 中的第二个最低有效位(bitVector[0] 选择最低有效位,因为 BSV 的索引从 0 开始)。我们可以使用 for 循环来复制许多具有相同形式的代码行。例如,要聚合 and1 函数形成一个 5 位 and 函数,我们可以写:

function Bit#(5) and5(Bit#(5) a, Bit#(5) b); Bit#(5) aggregate;

for(Integer i = 0; i < 5; i = i + 1) begin

aggregate[i] = and1(a[i], b[i]);

end

return aggregate;

endfunction

BSV 编译器在其静态展开阶段会用其完全展开的版本替换这个 for 循环。

aggregate[0] = and1(a[0], b[0]);

aggregate[1] = and1(a[1], b[1]);

aggregate[2] = and1(a[2], b[2]);

aggregate[3] = and1(a[3], b[3]);

aggregate[4] = and1(a[4], b[4]);

练习 2(1 分): 使用 for 循环和

multiplexer1完成函数multiplexer5在Multiplexer.bsv中的实现。 通过运行多路选择器测试台检查代码的正确性:$ make mux $ ./simMux可以使用另一个测试台来查看单元的输出:

$ make muxsimple $ ./simMuxSimple

多态性和高阶构造器

到目前为止,我们已经实现了两个版本的多路选择器函数,但可以想象需要一个 n 位多路选择器。如果我们不必完全重新实现多路选择器就能使用不同的宽度,那将是很好的。使用前一节中介绍的 for 循环,我们的多路选择器代码已经有些参数化,因为我们使用了常数大小和相同类型。我们可以通过使用 typedef 给多路选择器的大小起一个名字(N)来做得更好。我们的新多路选择器代码看起来像这样:

typedef 5 N;

function Bit#(N) multiplexerN(Bit#(1) sel, Bit#(N) a, Bit#(N) b);

// ...

// 从 multiplexer5 中的代码,用 N(或 valueOf(N))替换 5

// ...

endfunction

typedef 使我们能够随意更改多路选择器的大小。valueOf 函数在我们的代码中引入了一个小细节:N 不是一个 Integer 而是一个 数值类型,必须在用于表达式之前转换为 Integer。尽管有所改进,我们的实现仍然

缺乏一些灵活性。所有多路选择器的实例必须具有相同的类型,我们仍然必须为每次想要新的多路选择器时产生新代码。然而在 BSV 中,我们可以进一步参数化模块以允许不同的实例具有实例特定的参数。这种模块是多态的,硬件的实现会根据编译时配置自动改变。多态性是 BSV 设计空间探索的本质。

真正的多态多路选择器可以从以下开始:

// typedef 32 N; // 不需要

function Bit#(n) multiplexer n(Bit#(1) sel, Bit#(n) a, Bit#(n) b);

变量 n 代表多路选择器的宽度,替换了具体值 N(=32)。在 BSV 中,类型变量(n)以小写字母开头,而具体类型(N)以大写字母开头。

练习 3(2 分): 完成函数

multiplexer_n的定义。通过将原始定义的multiplexer5只更改为:return multiplexer_n(sel, a, b);来验证此函数的正确性。这种重新定义允许测试台在不修改的情况下测试你的新实现。

在 BSV 中构建加法器

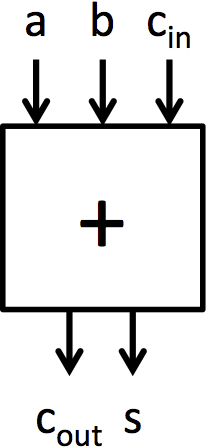

现在我们将转向构建加法器。加法的基本单元是全加器,如图 2a 所示。这个单元将两个输入位和一个进位输入位相加,它产生一个和位和一个进位输出位。Adders.bsv 包含两个函数定义,描述了全加器的行为。fa_add 计算全加器的加法输出,fa_carry 计算进位输出。这些函数包含与第 2 讲中呈现的全加器相同的逻辑。

可以通过将 4 个全加器连接在一起制作一个操作 4 位数的加法器,如图 2b 所示。这种加法器架构被称为行波进位加法器,因为进位链的结构。为了生成这种加法器而不写出每个显式的全加器,可以使用类似于 multiplexer5 的 for 循环。

练习 4(2 分): 使用 for 循环正确连接所有使用

fa_sum和fa_carry的代码来完成add4的代码。

通过连接 4 位加法器,可以构建更大的加法器,就像通过连接全加器构建 4 位加法器一样。Adders.bsv 包含两个使用 add4 和连接电路构建的加法器模块:mkRCAdder 和 mkCSAdder。注意,与此点到的其他加法器不同,这些加法器是作为模块而不是函数实现的。这是一个微妙但重要的区别。在 BSV 中,函数由编译器自动内联,而模块必须使用 '<-' 符号显式实例化。如果我们将 8 位加法器制作成一个函数,使用它的 BSV 代码的多个位置将实例化多个加法器。通过将其制作成一个模块,多个来源可以使用相同的 8 位加法器。

在模块 mkRCAdder 中包含了图 2d 所示的 8 位行波进位加法器的完整实现。可以通过运行以下命令进行测试:

make rca

./simRca

由于 mkRCAdder 是通过组合 add4 实例构建的,运行 ./simRCA 也将测试 add4。可以使用另一个测试台来查看单元的输出:

$ make rcasimple

$ ./simRca

Simple

还有一个 mkCSAdder 模块,旨在实现图 3 所示的选择进位加法器,但其实现未包含。

练习 5(5 分): 在模块

mkCSAdder中完成选择进位加法器的代码。使用图 3 作为所需硬件和连接的指南。可以通过运行以下命令测试此模块:$ make csa $ ./simCsa可以使用另一个测试台来查看单元的输出:

$ make csasimple $ ./simCsaSimple

讨论问题

在初始实验代码提供的文本文件

discussion.txt中写下对这些问题的回答。

- 你的一位多路选择器使用了多少个门?5 位多路选择器呢?写下 N 位多路选择器中门的数量的公式。(2 分)

- 假设一个全加器需要 5 个门。8 位行波进位加法器需要多少个门?8 位选择进位加法器需要多少个门?(2 分)

- 假设一个全加器需要 A 时间单位来计算其输出,一旦所有输入都有效,一个多路选择器需要 M 时间单位来计算其输出。用 A 和 M 表示,8 位行波进位加法器需要多长时间?8 位选择进位加法器需要多长时间?(2 分)

- 可选:你花了多长时间来完成这个实验?

完成后,使用 git add 添加任何必要的文件,使用 git commit -am "Final submission" 提交更改,并用 git push 推送修改以进行评分。

© 2016 麻省理工学院。版权所有。

实验 2: 乘法器

实验 2 截止日期: 2016年9月26日星期一,晚上11:59:59 EDT。 你的实验 2 交付物包括:

- 在

Multipliers.bsv和TestBench.bsv中对练习 1-9 的回答,- 在

discussion.txt中对讨论问题 1-5 的回答。

引言

在本实验中,你将构建不同的乘法器实现,并使用提供的测试台模板的自定义实例进行测试。首先,你将使用重复加法实现乘法器。接下来,你将使用折叠架构实现一个布斯乘法器。最后,你将通过实现一个基-4 布斯乘法器来构建一个更快的乘法器。

这些模块的输出将通过测试台与 BSV 的 * 操作符进行比较以验证功能。

本实验的所有材料都在 git 仓库 $GITROOT/lab2.git 中。本实验中提出的所有讨论问题都应该在 discussion.txt 中回答。完成实验后,将你的更改提交到仓库并推送更改。

内置乘法

BSV 具有内置的乘法操作:*。它是有符号或无符号乘法,具体取决于操作数的类型。对于 Bit#(n) 和 UInt#(n),* 操作符执行无符号乘法。对于 Int#(n),它执行有符号乘法。就像 + 操作符一样,* 操作符假设输入和输出都是同一类型。如果你想从 n 位操作数得到一个 2n 位结果,你必须首先将操作数扩展为 2n 位值。

Multipliers.bsv 包含了对 Bit#(n) 输入进行有符号和无符号乘法的函数。两个函数都返回 Bit#(TAdd#(n,n)) 输出。这些函数的代码如下所示:

注意:

pack和unpack是内置函数,分别用于转换为和从Bit#(n)转换。

function Bit#(TAdd#(n,n)) multiply_unsigned( Bit#(n) a, Bit#(n) b );

UInt#(n) a_uint = unpack(a);

UInt#(n) b_uint = unpack(b);

UInt#(TAdd#(n,n)) product_uint = zeroExtend(a_uint) * zeroExtend(b_uint);

return pack( product_uint );

endfunction

function Bit#(TAdd#(n,n)) multiply_signed( Bit#(n) a, Bit#(n) b );

Int#(n) a_int = unpack(a);

Int#(n) b_int = unpack(b);

Int#(TAdd#(n,n)) product_int = signExtend(a_int) * signExtend(b_int);

return pack( product_int );

endfunction

这些函数将作为你在本实验中的乘法器的功能基准进行比较。

测试台

本实验有两个参数化的测试台模板,可以很容易地用特定参数实例化,以测试两个乘法函数之间的对比,或测试一个乘法器模块与一个乘法器函数的对比。这些参数包括函数和模块接口。mkTbMulFunction 用相同的随机输入比较两个函数的输出,而 mkTbMulModule 比较测试模块(被测试设备或 DUT)和参考函数的输出。

以下代码展示了如何为特定的函数和/或模块实现测试台。

(* synthesize *)

module mkTbDumb();

function Bit#(16) test_function( Bit#(8) a, Bit#(8) b ) = multiply_unsigned( a, b );

Empty tb <- mkTbMulFunction

(test_function, multiply_unsigned, True);

return tb;

endmodule

(* synthesize *)

module mkTbFoldedMultiplier();

Multiplier#(8) dut <- mkFoldedMultiplier();

Empty tb <- mkTbMulModule(dut, multiply_signed, True);

return tb;

endmodule

下面两行使用 TestBenchTemplates.bsv 中的测试台模板实例化特定的测试台。

Empty tb <- mkTbMulFunction(test_function, multiply_unsigned, True);

Empty tb <- mkTbMulModule(dut, multiply_signed, True);

每个的第一个参数(test_function 和 dut)是要测试的函数或模块。第二个参数(multiply_unsigned 和 multiply_signed)是正确实现的参考函数。在这种情况下,参考函数是使用 BSV 的 * 操作符创建的。最后一个参数是一个布尔值,指示你是否希望输出详细信息。如果你只想让测试台打印 PASSED 或 FAILED,将最后一个参数设置为 False。

这些测试台(mkTbDumb 和 mkTbFoldedMultiplier)可以使用提供的 Makefile 轻松构建。要编译这些示例,你会为第一个写 make Dumb.tb,为第二个写 make FoldedMultiplier.tb。makefile 将产生可执行文件 simDumb 和 simFoldedMultiplier。要编译你自己的测试台 mkTb<name>,运行

make <name>.tb

./sim<name>

编译过程不会产生 .tb 文件,扩展名只是用来指示应使用哪个构建目标。

练习 1(2 分): 在

TestBench.bsv中编写一个测试台mkTbSignedVsUnsigned,测试multiply_signed是否产生与multiply_unsigned相同的输出。按上述描述编译此测试台并运行。 (即运行$ make SignedVsUnsigned.tb然后

$ ./simSignedVsUnsigned)

讨论问题 1(1 分): 在使用二的补码编码时,从硬件角度来看,无符号加法与有符号加法是相同的。根据测试台的证据,无符号乘法与有符号乘法是否相同?

讨论问题 2(2 分): 在

mkTBDumb中排除以下行function Bit#(16) test_function( Bit#(8) a, Bit#(8) b ) = multiply_unsigned( a, b );并修改其余的模块,以便拥有

(* synthesize *) module mkTbDumb(); Empty tb <- mkTbMulFunction(multiply_unsigned, multiply_unsigned, True); return tb; endmodule将导致编译错误。原始代码是如何修复编译错误的?你也可以通过定义两个函数来修复错误,如下所示。

(* synthesize *) module mkTbDumb(); function Bit#(16) test_function( Bit#(8) a, Bit#(8) b ) = multiply_unsigned( a, b ); function Bit#(16) ref_function( Bit#(8) a, Bit#(8) b ) = multiply_unsigned( a, b ); Empty tb <- mkTbMulFunction(test_function, ref_function, True); return tb; endmodule为什么不需要两个函数定义?(即为什么

mkTbMulFunction的第二个操作数可以有变量类型?)提示: 查看TestBenchTemplates.bsv中mkTbMulFunction的操作数类型。

通过重复加法实现乘法

作为组合函数

在 Multipliers.bsv 中,有一个用于计算乘法的函数框架代码,该函数通过重复加法来计算。由于这是一个函数,它必须代表一个组合电路。

练习 2(3 分): 填写

multiply_by_adding的代码,使其能够使用重复加法在一个时钟周期内计算 a 和 b 的乘积。(你将在练习 3 中验证你的乘法器的正确性。)如果你需要一个加法器从两个 n 位操作数产生一个 (n+1) 位输出,请按照multiply_unsigned和multiply_signed的模型,先将操作数扩展为 (n+1) 位再相加。

练习 3(1 分): 在

TestBench.bsv中填写测试台mkTbEx3来测试multiply_by_adding的功能。使用以下命令编译它:$ make Ex3.tb并使用以下命令运行它:

$ ./simEx3

讨论问题 3(1 分): 你实现的

multiply_by_adding是有符号乘法器还是无符号乘法器?(注意:如果它既不符合multiply_signed也不符合multiply_unsigned,那么它是错误的。)

作为顺序模块

使用重复加法乘以两个 32 位数需要三十一个 32 位加法器。这些加法器可能会根据你的目标和其余设计的限制占用大量面积。在讲座中,展示了重复加法乘法器的折叠版本,以减少乘法器所需的面积。折叠版本的乘法器使用顺序电路,通过每个时钟周期完成一个所需计算并将临时结果存储在寄存器中,来共享单个 32 位加法器。

在本实验中,我们将创建一个 n 位折叠乘法器。寄存器 i 将跟踪模块在计算结果中的进度。如果 0 <= i < n,则正在进行计算,规则 mul_step 应该在做工作并递增 i。有两种方法可以做到这一点。第一种方法是制作一个包含 if 语句的规则,如下所示:

rule mul_step;

if (i < fromInteger(valueOf(n))) begin

// 做一些事情

end

endrule

这个规则每个周期都运行,但只有当 i < n 时才做事情。第二种方法是制作一个带有 保护 的规则,如下所示:

rule mul_step(i < fromInteger(valueOf(n)));

// 做一些事情

endrule

这个规则不会每个周期都运行。相反,它只在其保护,i < fromInteger(valueOf(i)),为真时运行。虽然这在功能上没有区别,但在 BSV 语言的语义和编译器中有所不同。这种差异将在后续讲座中讨论,但在此之前,你应该在本实验的设计中使用保护。如果不这样做,你可能会遇到测试台因为运行超时而失败。

注意: BSV 编译器防止多个规则在同一周期内触发,如果它们可能写入同一个寄存器(有点类似...)。BSV 编译器将规则

mul_step视为每次触发时都写入i。测试台中有一个规

则用于向乘法器模块提供输入,因为它调用了 start 方法,所以它也每次触发时都写入 i。BSV 编译器看到这些冲突的规则,并发出编译器警告,它将把一个规则视为比另一个更紧急,永远不会同时触发它们。它通常选择 mul_step,由于该规则每个周期都触发,它阻止了测试台规则向模块提供输入。

当 i 达到 n 时,结果已准备好读取,因此 result_ready 应返回 true。当调用动作值方法 result 时,i 的状态应增加 1 至 n+1。i == n+1 表明模块准备重新开始,因此 start_ready 应返回 true。当调用动作方法 start 时,模块中所有寄存器的状态(包括 i)应设置为正确的值,以便重新开始计算。

练习 4(4 分): 填写模块

mkFoldedMultiplier的代码,以实现一个折叠的重复加法乘法器。你能在不使用变量位移位移器的情况下实现它吗?在不使用动态位选择的情况下实现它吗?(换句话说,你能避免通过存储在寄存器中的值进行位移或位选择吗?)

练习 5(1 分): 填写测试台

mkTbEx5以测试mkFoldedMultiplier的功能。如果你正确实现了mkFoldedMultiplier,它们应产生相同的输出。运行它们,使用:$ make Ex5.tb $ ./simEx5

布斯乘法算法

重复加法算法适用于乘以无符号输入,但它无法乘以使用二进制补码编码的(负)数字。为了乘以有符号数字,你需要一个不同的乘法算法。

布斯乘法算法是一种适用于有符号二进制补码数的算法。该算法使用一种特殊的编码对其中一个操作数进行编码,使其能够用于有符号数字。这种编码有时被称为布斯编码。布斯编码的数字有时用 +、- 和 0 符号表示,例如:0+-0b。这种编码的数字类似于二进制数字,因为数字中的每个位置都代表同样的二的幂。i 位上的 + 表示 (+1) · 2^i,但 i 位上的 - 对应 (-1) · 2^i。

可以通过查看原始数字的当前位和前一位(较不重要的位)来逐位获得二进制数字的布斯编码。在编码最低有效位时,假定前一位为零。下表显示了转换到布斯编码的对应关系。

| 当前位 | 前一位 | 布斯编码 |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | +1 |

| 1 | 0 | -1 |

| 1 | 1 | 0 |

布斯乘法算法最好描述为使用乘数的布斯编码的重复加法算法。不是在添加 0 或添加被乘数之间切换,如重复加法中所做的那样,布斯算法根据乘数的布斯编码在添加 0、添加被乘数或减去被乘数之间切换。下面的例子显示了被乘数 m 通过将乘数转换为其布斯编码来乘以一个负数。

| -5 · m | = 1011b · m |

|---|---|

= -+0-b · m | |

| = (-m) · 2^3 + m · 2^2 + (-m) · 2^0 | |

| = -8m + 4m - m | |

| = -5m |

布斯乘法算法可以使用以下算法有效地在硬件中实现。这个算法假设一个 n 位被乘数 m 正在被一个 n 位乘数 r 乘。

初始化:

// 所有位宽 2n+1

m_pos = {m, 0}

m_neg = {(-m), 0}

p = {0, r, 1'b0}

重复 n 次:

let pr = p 的最低两位

if ( pr == 2'b01 ): p = p + m_pos;

if ( pr == 2'b10 ): p = p + m_neg;

if ( pr == 2'b00 or pr == 2'b11 ): 无操作;

算术右移 p 一位;

res = p 的最高 2n 位;

符号 (-m) 是 m 的二进制补码的逆。由于最负数在二进制补码中没有正数对应,这个算法不适用于 m = 10...0b。因为这个限制,测试台已经修改,以避免在测试时使用最负数。

注意: 这不是设计硬件的好方法。永远不要因为硬件无法通过而从测试台中删除测试。解决这个问题的一种方法是实现一个 (n+1)-位的布斯

乘法器来执行 n 位有符号乘法,方法是对输入进行符号扩展。如果你使用零扩展而不是符号扩展输入,你可以得到两个输入的 n 位无符号乘积。如果你添加一个额外的输入到乘法器中,允许你在符号扩展和零扩展输入之间切换,那么你就有了一个可以在有符号和无符号乘法之间切换的 32 位乘法器。这个功能对于有有符号和无符号乘法指令的处理器来说非常有用。

这个算法还使用了算术移位。这是为有符号数字设计的移位。当向右移位数字时,它将最高有效位的旧值移回到 MSB 位置以保持值的符号相同。在 BSV 中,当移动类型为 Int#(n) 的值时会进行算术移位。要对 Bit#(n) 进行算术移位,你可能需要编写类似于 multiply_signed 的函数。此函数将 Bit#(n) 转换为 Int#(n),进行移位,然后再转换回来。

练习 6(4 分): 填写模块

mkBooth的实现,实现一个折叠版本的布斯乘法算法:该模块使用参数化的输入大小n;你的实现应该适用于所有n>= 2。

练习 7(1 分): 填写测试台

mkTbEx7a和mkTbEx7b来测试你的布斯乘法器选择的不同位宽。你可以用以下命令测试它们:$ make Ex7a.tb $ ./simEx7a和

$ make Ex7b.tb $ ./simEx7b

基-4 布斯乘法器

布斯乘法器的另一个优点是它可以通过一次执行原始布斯算法的两个步骤来有效地加速,这相当于每个周期完成两位部分和的加法。这种加速布斯算法的方法被称为基-4 布斯乘法器。

基-4 布斯乘法器在编码乘数时一次查看两个当前位。由于基-4 乘法器每次编码可以减少到不超过一个非零位的布斯编码,因此它能比原始的布斯乘法器运行得更快。例如,位 01 在之前(较不重要的)0位之后被转换为原始布斯编码的 +-。+- 表示 2^(i+1) - 2^i,等于 2^i,即 0+。下表显示了基-4 布斯编码的一个情况(你将在下一个讨论问题中填写其余的表格)。

| 当前位 | 前一位 | 原始布斯编码 | 基-4 布斯编码 |

|---|---|---|---|

| 00 | 0 | ||

| 00 | 1 | ||

| 01 | 0 | +- | 0+ |

| 01 | 1 | ||

| 10 | 0 | ||

| 10 | 1 | ||

| 11 | 0 | ||

| 11 | 1 |

讨论问题 4(1 分): 在

discussion.txt中填写上表。基-4 布斯编码中不应有超过一个非零符号。

下面是一个基-4 布斯乘法器的伪代码:

初始化:

// 所有位宽 2n + 2

m_pos = {msb(m), m, 0}

m_neg = {msb(-m), (-m), 0}

p = {0, r, 1'b0}

重复 n/2 次:

let pr = p 的最低三位

if ( pr == 3'b000 ): 无操作;

if ( pr == 3'b001 ): p = p + m_pos;

if ( pr == 3'b010 ): p = p + m_pos;

if ( pr == 3'b011 ): p = p + (m_pos << 1);

if ( pr == 3'b100 ): ...

... 根据表格填写剩余部分 ...

算术右移 p 两位;

res = 去掉 p 的最高位和最低位;

练习 8(2 分): 填写模块

mkBoothRadix4的实现,实现一个基-4 布斯乘法器。该模块使用参数化的输入大小n;你的实现应该适用于所有偶数n>= 2。

练习 9(1 分): 填写测试台

mkTbEx9a和mkTbEx9b为你的基-4 布斯乘法器测试不同的偶数位宽。你可以用以下命令测试它们:$ make Ex9a.tb $ ./simEx9a和

$ make Ex9b.tb $ ./simEx9b

讨论问题 5(1 分): 现在考虑将你的布斯乘法器进一步扩展到基-8 布斯乘法器。这就像在单个步骤中执行基-2 布斯乘法器的 3 个步骤。所有基-8 布斯编码是否可以像基-4 布斯乘法器那样只用一个非零符号来表示?你认为制作基-8 布斯乘法器还有意义吗?

讨论问题 6(可选): 你花了多久时间来完成这个实验?

当你完成所有练习并且代码工作正常时,提交你的更改到仓库,并将你的更改推送回源。

© 2016 麻省理工学院。版权所有。

实验 3: 快速傅里叶变换管道

实验 3 截止日期: 2016年10月5日星期三,晚上11:59:59 EDT。

实验 3 的交付内容包括:

- 在

Fifo.bsv和Fft.bsv中对练习 1-4 的回答,- 在

discussion.txt中对讨论问题 1-2 的回答。

引言

在本实验中,你将构建不同版本的快速傅里叶变换(FFT)模块,从一个组合 FFT 模块开始。这个模块在之前版本的课程中名为“L0x”的讲座中详细描述,讲座标题为《FFT:复杂组合电路的一个示例》。你可以在以下链接找到该讲座的 [pptx] 或 [pdf]。

首先,你将实现一个折叠的三阶多周期 FFT 模块。这种实现通过阶段间共享硬件来减少所需的面积。接下来,你将实现一个使用寄存器连接各阶段的非弹性流水线 FFT。最后,你将通过使用 FIFO 连接各阶段实现一个弹性流水线 FFT。

守卫

发布的 FFT 讲座假设所有 FIFO 上都有守卫。enq、deq 和 first 上的守卫防止包含这些方法调用的规则在方法的守卫不满足时触发。因此,讲座中的代码在不检查 FIFO 是否 notFull 或 notEmpty 的情况下使用 enq、deq 和 first。

方法上的守卫语法如下所示:

method Action myMethodName(Bit#(8) in) if (myGuardExpression);

// 方法体

endmethod

myGuardExpression 是一个表达式,当且仅当调用 myMethodName 有效时才为 True。如果 myMethodName 将在下次触发时在规则中使用,那么规则将被阻止执行,直到 myGuardExpression 为 True。

练习 1(5 分): 作为热身,为包含在

Fifo.bsv中的两元素无冲突 FIFO 的enq、deq和first方法添加守卫。

数据类型

提供了多种数据类型以帮助实现 FFT。提供的默认设置描述了一个与 64 个不同的 64 位复数输入向量一起工作的 FFT 实现。64 位复数数据的类型定义为 ComplexData。FftPoints 定义了复数的数量,FftIdx 定义了访问向量中一个点所需的数据类型,NumStages 定义了阶段数,StageIdx 定义了访问特定阶段的数据类型,BflysPerStage 定义了每个阶段中蝴蝶单元的数量。这些类型参数为你提供了方便,你可以在实现中自由使用这些类型。

应该注意的是,这个实验的目标不是理解 FFT 算法,而是在一个真实世界的应用中尝试不同的控制逻辑。getTwiddle 和 permute 函数为你的方便而提供,并包含在测试台中。然而,它们的实现并不严格遵循 FFT 算法,甚至可能会更改。有益的是,不要关注算法

,而是关注如何改变给定数据路径的控制逻辑,以增强其特性。

蝴蝶单元

模块 mkBfly4 实现了讲座中讨论的 4 路蝴蝶函数。这个模块应该完全按照你在代码中使用的次数来实例化。

interface Bfly4;

method Vector#(4,ComplexData) bfly4(Vector#(4,ComplexData) t, Vector#(4,ComplexData) x);

endinterface

module mkBfly4(Bfly4);

method Vector#(4,ComplexData) bfly4(Vector#(4,ComplexData) t, Vector#(4,ComplexData) x);

// 方法体

endmethod

endmodule

FFT 的不同实现

你将实现与以下 FFT 接口对应的模块:

interface Fft;

method Action enq(Vector#(FftPoints, ComplexData) in);

method ActionValue#(Vector#(FftPoints, ComplexData)) deq();

endinterface

模块 mkFftCombinational、mkFftFolded、mkFftInelasticPipeline 和 mkFftElasticPipeline 都应该实现一个与组合模型功能相当的 64 点 FFT。模块 mkFftCombinational 已经提供给你。你的任务是实现其他三个模块,并使用提供的组合实现作为基准来验证它们的正确性。

每个模块都包含两个 FIFO,inFifo 和 outFifo,分别包含输入复数向量和输出复数向量,如下所示。

module mkFftCombinational(Fft);

Fifo#(2, Vector#(FftPoints, ComplexData)) inFifo <- mkCFFifo;

Fifo#(2, Vector#(FftPoints, ComplexData)) outFifo <- mkCFFifo;

...

这些 FIFO 是课堂上展示的两元素无冲突 FIFO,在练习一中添加了守卫。

每个模块还包含一个或多个 mkBfly4 的 Vector,如下所示。

Vector#(3, Vector#(16, Bfly4)) bfly <- replicateM(mkBfly4);

doFft 规则应该从 inFifo 中取出一个输入,执行 FFT 算法,最后将结果入队到 outFifo。这个规则通常需要其他函数和模块才能正确运作。弹性流水线实现将需要多个规则。

...

rule doFft;

// 规则体

endrule

...

Fft 接口提供了方法向 FFT 模块发送数据并从中接收数据。该接口只入队到 inFifo 并从 outFifo 出队。

...

method Action enq(Vector#(FftPoints, ComplexData) in);

inFifo.enq(in);

endmethod

method ActionValue#(Vector#(FftPoints, ComplexData)) deq;

outFifo.deq;

return outFifo.first;

endmethod

endmodule

练习 2(5 分): 在

mkFftFolded中,创建一个折叠的 FFT 实现,总共只使用 16 个蝴蝶单元。这个实现应该在恰好 3 个周期内完成整个 FFT 算法(从出队输入 FIFO 到入队输出 FIFO)。Makefile 可用于构建

simFold来测试此实现。编译并运行使用$ make fold $ ./simFold

练习 3(5 分): 在

mkFftInelasticPipeline中,创建一个非弹性流水线 FFT 实现。这个实现应该使用 48 个蝴蝶单元和 2 个大型寄存器,

每个寄存器携带 64 个复数。这个流水线单元的延迟也必须恰好是 3 个周期,尽管其吞吐量将是每个周期 1 个 FFT 操作。

>

>Makefile 可用于构建 simInelastic 来测试此实现。编译并运行使用

>

> >$ make inelastic >$ ./simInelastic >

练习 4(10 分):

在

mkFftElasticPipeline中,创建一个弹性流水线 FFT 实现。这个实现应该使用 48 个蝴蝶单元和两个大型 FIFO。FIFO 之间的阶段应该在它们自己的规则中,这些规则可以独立触发。这个流水线单元的延迟也必须恰好是 3 个周期,尽管其吞吐量将是每个周期 1 个 FFT 操作。Makefile 可用于构建

simElastic来测试此实现。编译并运行使用$ make elastic $ ./simElastic

讨论问题

在实验室存储库提供的文本文件 discussion.txt 中写下你对这些问题的回答。

讨论问题 1 和 2:

假设你被给予一个执行 10 阶段算法的黑盒模块。你不能查看它的内部实现,但你可以通过给它数据并查看模块的输出来测试这个模块。你被告知它是按照本实验中涵盖的结构之一实现的,但你不知道是哪一个。

- 你如何判断模块的实现是折叠实现还是流水线实现? (3 分)

- 一旦你知道模块具有流水线结构,你如何判断它是非弹性的还是弹性的? (2 分)

讨论问题 3(可选): 你花了多长时间来完成这个实验?

当你完成所有练习并且代码工作正常时,提交你的更改到仓库,并将你的更改推送回源。

奖励

作为额外的挑战,实现讲座中最后几张可选幻灯片中介绍的多态超折叠 FFT 模块。这个超折叠 FFT 模块在给定有限数量的蝴蝶单元(1、2、4、8 或 16 个蝴蝶单元)的情况下执行 FFT 操作。蝴蝶单元数量的参数由 radix 给出。由于 radix 是一个类型变量,我们必须在模块的接口中引入它,因此我们定义了一个名为 SuperFoldedFft 的新接口,如下所示:

interface SuperFoldedFft#(radix);

method Action enq(Vector#(64, ComplexData inVec));

method ActionValue#(Vector#(64, ComplexData)) deq;

endinterface

我们还必须在模块 mkFftSuperFolded 中声明 provisos,以通知 Bluespec 编译器 radix 和 FftPoints 之间的算术约束(即 radix 是 FftPoints/4 的一个因数)。

我们最终使用 4 个蝴蝶单元实例化了一个超折叠流水线模块,该模块实现了正常的 Fft 接口。这个模块将用于测试。我们还向你展示了将 SuperFoldedFft#(radix, n) 接口转换为 `Fft

` 接口的函数。

Makefile 可用于构建 simSfol 来测试此实现。编译并运行使用

make sfol

./simSfol

为了做超折叠 FFT 模块,首先尝试编写一个只有 2 个蝴蝶单元的超折叠 FFT 模块,没有任何类型参数。然后尝试推广设计以使用任意数量的蝴蝶单元。

© 2016 麻省理工学院。版权所有。

实验 4: N 元 FIFOs

实验 4 截止日期: 2016年10月12日,星期三,晚上11:59:59 EDT。

实验 4 的交付内容包括:

- 在

MyFifo.bsv中对练习 1-4 的回答- 在

discussion.txt中对讨论问题 1-4 的回答

引言

本实验聚焦于设计各种 N 元素 FIFO,包括无冲突 FIFO。无冲突 FIFO 是流水线设计的重要工具,因为它们允许流水线阶段被连接,而不引入额外的调度约束。

创建一个无冲突的 FIFO 是困难的,因为你需要创建不会相互冲突的入队和出队方法。不是无冲突的 FIFO,如流水线和旁路 FIFO,假设了入队和出队的顺序。流水线 FIFO 假设在入队前完成出队,而旁路 FIFO 假设在出队前完成入队。仅用 EHRs 来实现流水线和旁路 FIFOs,并用 EHRs 加上规范化规则来创建无冲突 FIFO。

参数化大小 FIFO 的功能

在讲座中,你已经看到了一个两元素无冲突 FIFO 的实现。这个模块利用 EHRs 和一个规范化规则来实现无冲突的入队和出队方法。出队仅从第一个寄存器读取,而入队仅写入第二个寄存器。如果需要,规范化规则会将第二个寄存器的内容移动到第一个寄存器。这种结构对于小型 FIFO 如两元素 FIFO 来说效果很好,但对于更大的 FIFO 来说使用过于复杂。

要实现更大的 FIFO,你可以使用循环缓冲区。

图 1 显示了在循环缓冲区中实现的 FIFO。这个 FIFO 包含数据 [1, 2, 3],1 在前端,3 在后端。指针 deqP 指向 FIFO 的前端,enqP 指向 FIFO 后的第一个空闲位置。

| 索引 | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| 数据 | - | - | 1 | 2 | 3 | - |

| ↑ | ↑ | |||||

| 指针 | deqP | enqP |

图 1:实现在循环缓冲区中的 6 元素 FIFO 示例。这个 FIFO 包含 [1, 2, 3]。

在循环缓冲区中实现的 FIFO 中,入队只是在 enqP 的位置写入,并将 enqP 增加 1。将值 4 入队到示例 FIFO 的结果可见于图 2。

| 索引 | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| 数据 | - | - | 1 | 2 | 3 | 4 |

| ↑ | ↑ | |||||

| 指针 | enqP | deqP |

图 2:入队 4 后的 6 元素 FIFO。这个 FIFO 包含 [1, 2, 3, 4]。

出队则更为简单

。出队时,只需将 deqP 增加 1。从示例 FIFO 出队一个值的结果可见于图 3。请注意数据并未被移除。虽然值 1 仍存储在 FIFO 的寄存器中,但它处于无效空间,因此用户再也看不到它。FIFO 图中的所有 - 都是指之前曾在 FIFO 中但现已无效的旧数据。这个 FIFO 结构中没有有效位。位置在出队指针之后但在入队指针之前时有效。这为判断 FIFO 是满还是空增加了一些复杂性。

| 索引 | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| 数据 | - | - | 1 | 2 | 3 | 4 |

| ↑ | ↑ | |||||

| 指针 | enqP | deqP |

图 3:出队一个元素后的 6 元素 FIFO。这个 FIFO 包含 [2, 3, 4]。

考虑图 4 中的 FIFO 状态。这个图显示了一个 FIFO,其中 enqP 和 deqP 指针指向同一个元素。这个 FIFO 是满的还是空的?除非你有更多的信息,否则无法判断。为了跟踪当指针重叠时 FIFO 的状态,我们将有一个寄存器表示 FIFO 是否满,另一个表示 FIFO 是否空。图 5 显示了一个满 FIFO,附加的寄存器跟踪满和空的状态。

| 索引 | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| 数据 | 3 | 9 | 6 | 2 | 0 | 3 |

| ↑ ↑ | ||||||

| 指针 | enqP deqP |

图 4:6 元素 FIFO 满还是空。

| 索引 | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| 数据 | 3 | 9 | 6 | 2 | 0 | 3 |

| ↑ ↑ | ||||||

| 指针 | enqP deqP | |||||

| 大小 | full: | True | empty: | False |

图 5:6 元素 FIFO 满。

清空的 FIFO 将会使 enqP 和 deqP 指向同一位置,其中 empty 为 True 而 full 为 False。

如果 enqP 或 deqP 指向同一位置,empty 或 full 其中之一应为真。当一个指针移动到另一个指针的位置时,根据移动指针的方法,FIFO 需要设置 empty 或 full 信号。如果执行了入队操作,full 应该为真。如果执行了出队操作,empty 应该为真。

N 元素 FIFO 的实现细节

本节深入探讨了如何在 Bluespec 中以循环缓冲区的形式实现 N 元素 FIFO。

数据结构

FIFO 将具有 n 元素的寄存器向量来存储 FIFO 中的数据。这个 FIFO 应该能够使用参数类型 t 工作,因此寄存器将是 Reg#(t) 类型。

指针

FIFO 将具有入队和出队操作的指针。这些指针,enqP 和 deqP,指向下一次操作将发生的位置。入队指针指向所有有效数据之后的下一个元素,而出队指针指向有效数据的前端。这些指针将是 Bit#(TLog#(n)) 类型的寄存器值。TLog#(n) 是对应于数值类型 n 的值的基数 2 对数的上限的数值类型。简单来说,TLog#(n) 是从 0 到 n-1 计数所需的位数。

状态标志

FIFO 还将伴随入队和出队指针具有两个状态标志:full 和 empty。这些寄存器在 enqP 不等于 deqP 时都为假,但当 enqP 和 deqP 相等时,要么 full 要么 empty 为真,表达 FIFO 的状态。

接口方法

此 FIFO 将保持与课堂上介绍的以前的 FIFO 相同的接口。

interface Fifo#(numeric type n, type t);

method Bool notFull;

method Action enq(t x);

method Bool notEmpty;

method Action deq;

method t first;

method Action clear;

endinterface

数据类型为 t,大小为数值类型 n。

-

NotFull

notFull方法返回内部 full 信号的否定。 -

Enq

enq方法将数据写入入队指针指向的位置,增加入队指针,并在必要时更新 empty 和 full 值。如果无法入队,此方法应通过守卫被阻塞。 -

NotEmpty

notEmpty方法返回内部 empty 信号的否定。 -

Deq

deq方法增加出队指针,并在必要时更新 empty 和 full 值。如果无法出队,此方法应通过守卫被阻塞。 -

First

first方法返回出队指针指向的元素,只要 FIFO 不为空。如果 FIFO 为空,此方法应通过守卫被阻塞。 -

Clear

clear方法将入队和出队指针设置为 0,并通过设置内部 full 和 empty 信号的适当值将 FIFO 状态设置为空。

方法排序

根据实施的 FIFO 类型,enq 和 deq 可能以任何顺序、固定顺序触发,或者它们可能无法在同一周期触发。通常与 enq 和 deq 相关联的方法应该能够与各自的方法触发。即 notFull 应该能够

与 enq 触发,同样 notEmpty 和 first 应该能够与 deq 触发。在所有情况下,clear 方法应该优先于所有其他方法,因此它会看起来最后发生。

测试基础设施

这个实验室有两套测试台:功能测试台和调度测试台。

功能测试台将您的 FIFO 实现与参考 FIFO 进行比较。测试台随机入队和出队数据,并确保两个 FIFO 的所有输出结果相同。这些参考 FIFO 是作为内置 BSV FIFO 的包装实现的。

调度测试台的工作方式与迄今为止的所有其他测试台不同。调度测试台不是用来运行的,它们只是用来编译的。这些测试台强制执行您的 FIFO 应该能够满足的调度。如果测试台编译时没有警告,则您的 FIFO 能够满足这些调度,并且它们通过了测试。如果您的 FIFO 无法满足调度,将在编译期间产生编译器警告或错误。这些信息将表明两个规则在测试台中不能一起触发,或者某些规则的条件取决于该规则的触发。

查看编译器输出时,确保通过找到显示

code generation for <module_name> starts

的行来查看哪个模块导致了错误。由于 Bluespec 编译器的使用方式,无论何时构建一个测试台,都会部分编译所有测试台,因此您可能会看到与您当前不关注的模块相关的警告。

实现 N 元素 FIFOs

有冲突的 FIFOs

首先,您将实现一个只使用寄存器的 N 元素 FIFO。这将导致 enq 和 deq 发生冲突,但它将为所有后续的 FIFO 设计提供一个起点。

练习 1(5 分): 在

MyFifo.bsv中实现mkMyConflictFifo。您可以通过运行以下命令来构建和运行功能测试台$ make conflict $ ./simConflictFunctional由于预期

enq和deq会发生冲突,因此此模块没有调度测试台。

现在我们已经有了一个初始的有冲突 FIFO,我们将看看它的冲突并构建它的冲突矩阵。

讨论问题 1(5 分): 每个接口方法中都读取和写入了哪些寄存器?记住,守卫中进行的寄存器读取也算数。

讨论问题 2(5 分): 为

mkMyConflictFifo填写冲突矩阵。为简化起见,将对同一寄存器的写入视为冲突(不仅仅是在单个规则内冲突)。

流水线和旁路 FIFOs

流水线和旁路 FIFO 是有冲突 FIFO 的下一步。流水线和旁路 FIFO 通过声明它们与它们各自的方法之间的固定顺序,使并发入队和出队成为可能。

流水线 FIFO 具有以下调度注释。

{notEmpty, first, deq} < {notFull, enq} < clear

旁路 FIFO 具有以下调度注释。

{notFull, enq} < {notEmpty, first, deq} < clear

用 EHRs 创建排序关系

有一个结构化的程序可以使用 EHRs 从一个有冲突的设计中获取这些调度注释。

- 用 EHRs 替换有冲突的寄存器。

- 分配 EHRs 的端口以匹配所需的调度。第一组方法访问端口 0,第二组访问端口 1,等等。

例如,要获取调度注释

{notEmpty, first, deq} < {notFull, enq} < clear

首先将阻止上述调度注释的寄存器替换为 EHRs。在这种情况下,包括 enqP, deqP, full, 和 empty。现在,分配 EHRs 的端口以匹配所需的调度。{notEmpty, first, deq} 都获得端口 0,{notFull, enq} 获得端口 1,而 clear 获得端口 2。你可以通过减少未使用端口的 EHRs 的大小来稍微优化这个设计,但这对于本实验室的目的并不必要。

练习 2(10 分): 在

MyFifo.bsv中使用上述方法和 EHRs 实现mkMyPipelineFifo和mkMyBypassFifo。您可以通过运行以下命令来构建流水线 FIFO 和旁路 FIFO 的功能和调度测试台$ make pipeline和

$ make bypass分别。如果这些编译没有调度警告,那么调度测试台通过了,这两个 FIFO 具有预期的调度行为。要测试它们的功能是否符合参考实现,你可以运行

$ ./simPipelineFunctional和

$ ./simBypassFunctional如果您在实现

clear时遇到符合正确的调度和功能的困难,您可以通过将has_clear设置为 false 来暂时从关联模块中的TestBench.bsv中删除它。

无冲突 FIFOs

无冲突 FIFO 是最灵活的 FIFO。它可以放置在处理器流水线中,而不增加阶段之间的额外调度约束。无冲突 FIFO 的理想调度注释如下所示。

{notFull, enq} CF {notEmpty, first, deq}

{notFull, enq, notEmpty, first, deq} < clear

选择 clear 方法不与 enq 和

deq 无冲突,因为它在其他方法之前被赋予了优先权。如果 clear 和 enq 在同一个周期内发生,clear 方法将有优先权,并且在下一个周期 FIFO 将为空。要匹配使用方法顺序的行为,clear 在 enq 和 deq 之后。

使用 EHRs 创建无冲突的调度

就像为流水线和旁路 FIFOs 的程序一样,有一个程序可以使用 EHRs 获取所需的无冲突调度注释。

- 对于每个需要与另一方法无冲突的有冲突的

Action和ActionValue方法,添加一个 EHR 来表示对该方法的调用请求。如果方法没有参数,EHR 中的数据类型应该是Bool(True 表示请求,False 表示无请求)。如果方法有一个类型为t的参数,则 EHR 的数据类型应该是Maybe#(t)(tagged Valid x表示带参数x的请求,tagged Invalid表示无请求)。如果方法有类型为t1,t2等的参数,则 EHR 的数据类型应该是Maybe#(TupleN#(t1,t2,...))。 - 用新添加的 EHR 替换每个有冲突的

Action和ActionValue方法中的动作。 - 创建一个规范化规则,从 EHRs 中获取请求并执行每个方法中曾经的动作。这个规范化规则应该在每个周期结束后触发,所有其他方法之后。

使用编译器属性强制规则触发

BSV 没有强制规范化规则每个周期都触发的方法,但它可以在编译时静态检查它是否会在每个周期触发。通过使用编译器属性,你可以向 Bluespec 编译器添加有关模块、方法、规则或函数的额外信息。你已经看到了 (* synthesize *) 属性,现在你将学习另外两个用于规则的属性。

正如你所知,规则或方法的守卫是显式守卫和隐式守卫的组合。属性 (* no_implicit_conditions *) 放在规则前面,告诉编译器你不希望规则体中有任何隐式守卫(编译器称守卫为条件)。如果你错了,并且规则中确实存在隐式守卫,编译器将在编译时抛出错误。这个守卫充当了断言,即 CAN_FIRE 等于显式守卫。

另一阻止规则触发的可能是与其他规则和方法的冲突。属性 (* fire_when_enabled *) 放在一个规则前面,告诉编译器只要规则的守卫满足,规则就应该触发。如果存在守卫满足而规则不触发的情况,那么编译器会在编译时抛出错误。这个守卫充当了断言,即 WILL_FIRE 等于 CAN_FIRE。

这两个属性一起使用会断言只要你的显式守卫为真,规则就会触发。如果你的显式守卫是真(或为空),那么它就断言规则将在每个周期触发。下面是两个属性一起使用的例子:

(* no_implicit_conditions *)

(* fire_when_enabled *)

rule firesEveryCycle;

// 规则体

endrule

(* no_implicit_conditions, fire_when_enabled *)

rule alsoFiresEveryCycle;

// 规则体

endrule

如果规则 fireEveryCycle 实际上不能每个周期都触发,Bluespec 编译器将抛出错误。你应该将这些属性放在你的规范化规则之上,以确保它每个周期都触发。

讨论问题 3(5 分): 使用

mkMyConflictFifo的冲突矩阵,哪些冲突不符合上述无冲突 FIFO 的调度约束?

练习 3(30 分): 按照上述描述实现

mkMyCFFifo,但不包括 clear 方法。您可以通过运行以下命令构建功能和调度测试台$ make cfnc如果这些编译没有调度警告,那么调度测试台通过了,FIFO 的

enq和deq方法可以以任何顺序调度。(如果有警告说规则m_maybe_clear没有动作将被移除,也是可以接受的。)你可以通过运行以下命令来运行功能测试台$ ./simCFNCFunctional

向无冲突 FIFO 添加 clear 方法

添加 clear 方法增加了设计的复杂性。它需要调度约束来防止 clear 在 enq 和 deq 之前被调度,但它不能与规范化规则发生冲突。

创建方法之间调度约束的最简单方法之一是让一个方法写入 EHR,另一个方法从 EHR 的后面端口读取。在这种情况下,你应该能够使用现有的 EHR 来强

使用编译器属性强制规则触发

BSV 没有强制规范化规则每个周期都触发的方法,但它可以在编译时静态检查它是否会在每个周期触发。通过使用编译器属性,你可以向 Bluespec 编译器添加有关模块、方法、规则或函数的额外信息。你已经看到了 (* synthesize *) 属性,现在你将学习另外两个用于规则的属性。

正如你所知,规则或方法的守卫是显式守卫和隐式守卫的组合。属性 (* no_implicit_conditions *) 放在规则前面,告诉编译器你不希望规则体中有任何隐式守卫(编译器称守卫为条件)。如果你错了,并且规则中确实存在隐式守卫,编译器将在编译时抛出错误。这个守卫充当了断言,即 CAN_FIRE 等于显式守卫。

另一阻止规则触发的可能是与其他规则和方法的冲突。属性 (* fire_when_enabled *) 放在一个规则前面,告诉编译器只要规则的守卫满足,规则就应该触发。如果存在守卫满足而规则不触发的情况,那么编译器会在编译时抛出错误。这个守卫充当了断言,即 WILL_FIRE 等于 CAN_FIRE。

这两个属性一起使用会断言只要你的显式守卫为真,规则就会触发。如果你的显式守卫是真(或为空),那么它就断言规则将在每个周期触发。下面是两个属性一起使用的例子:

(* no_implicit_conditions *)

(* fire_when_enabled *)

rule firesEveryCycle;

// 规则体

endrule

(* no_implicit_conditions, fire_when_enabled *)

rule alsoFiresEveryCycle;

// 规则体

endrule

如果规则 fireEveryCycle 实际上不能每个周期都触发,Bluespec 编译器将抛出错误。你应该将这些属性放在你的规范化规则之上,以确保它每个周期都触发。

讨论问题 3(5 分): 使用

mkMyConflictFifo的冲突矩阵,哪些冲突不符合上述无冲突 FIFO 的调度约束?

练习 3(30 分): 按照上述描述实现

mkMyCFFifo,但不包括 clear 方法。您可以通过运行以下命令构建功能和调度测试台$ make cfnc如果这些编译没有调度警告,那么调度测试台通过了,FIFO 的

enq和deq方法可以以任何顺序调度。(如果有警告说规则m_maybe_clear没有动作将被移除,也是可以接受的。)你可以通过运行以下命令来运行功能测试台$ ./simCFNCFunctional

向无冲突 FIFO 添加 clear 方法

添加 clear 方法增加了设计的复杂性。它需要调度约束来防止 clear 在 enq 和 deq 之前被调度,但它不能与规范化规则发生冲突。

创建方法之间调度约束的最简单方法之一是让一个方法写入 EHR,另一个方法从 EHR 的后面端口读取。在这种情况下,你应该能够使用现有的 EHR 来强

制这种调度约束。

练习 4(10 分): 向

mkMyCFFifo添加clear()方法。它应该在所有其他接口方法之后,并在规范化规则之前。您可以通过运行以下命令构建功能和调度测试台$ make cf如果这些编译没有调度警告,则调度测试台通过,FIFO 具有预期的调度行为。你可以通过运行

$ ./simCFFunctional来运行功能测试台。

讨论问题 4(5 分): 在设计

clear()方法时,您是如何强制执行调度约束{enq, deq} < clear的?

讨论问题 5(可选): 您花了多长时间完成这个实验室?

© 2016 麻省理工学院。保留所有权利。

实验 5: RISC-V 引介 - 多周期与两阶段流水线

实验 5截止日期:2016年10月24日,美东时间晚上11:59:59。

本实验的交付物包括:

- 在

TwoCycle.bsv、FourCycle.bsv、TwoStage.bsv和TwoStageBTB.bsv中完成练习1-4的答案- 在

discussion.txt中完成讨论问题1-4的答案

引言

本实验介绍了 RISC-V 处理器及其相关工具流。实验从介绍 RISC-V 处理器的单周期实现开始。然后你将创建两周期和四周期的实现,这些实现是由于内存结构危害驱动的。你将完成创建两阶段流水线的实现,使取指和执行阶段并行进行。这种两阶段流水线将成为未来流水线实现的基础。

处理器基础设施

在设置运行、测试、评估性能和调试你的 RISC-V 处理器的基础设施方面,已经为你完成了大量工作,无论是在仿真中还是在 FPGA 上。由于使用的内存类型,本实验的处理器设计无法在 FPGA 上运行。

初始代码

本实验提供的代码包含三个目录:

programs/包含 RISC-V 程序的汇编和 C 语言版本。scemi/包含编译和仿真处理器的基础设施。src/包含 RISC-V 处理器的 BSV 代码。

在 BSV 源文件夹中,有一个 src/includes/ 文件夹,其中包含用于 RISC-V 处理器的所有模块的 BSV 代码。你在本实验中不需要更改这些文件。这些文件简要说明如下。

| 文件名 | 内容 |

|---|---|

Btb.bsv | 分支目标缓冲区地址预测器的实现。 |

CsrFile.bsv | 实现 CSR(包括与主机机器通信的 mtohost)。 |

DelayedMemory.bsv | 实现具有一周期延迟的内存。 |

DMemory.bsv | 使用大型寄存器文件实现数据内存,具有组合读写功能。 |

Decode.bsv | 指令解码的实现。 |

Ehr.bsv | 如讲座中所述,使用 EHRs 实现。 |

Exec.bsv | 指令执行的实现。 |

Fifo.bsv | 如讲座中所述,使用 EHRs 实现各种 FIFO。 |

IMemory.bsv | 使用大型寄存器文件实现具有组合读功能的指令内存。 |

MemInit.bsv | 模块用于从主机 PC 下载指令和数据存储器的初始内容。 |

MemTypes.bsv | 与内存相关的常见类型。 |

ProcTypes.bsv | 与处理器相关的常见类型。 |

RFile.bsv | 寄存器文件的实现。 |

Types.bsv | 常见类型。 |

SceMi 设置

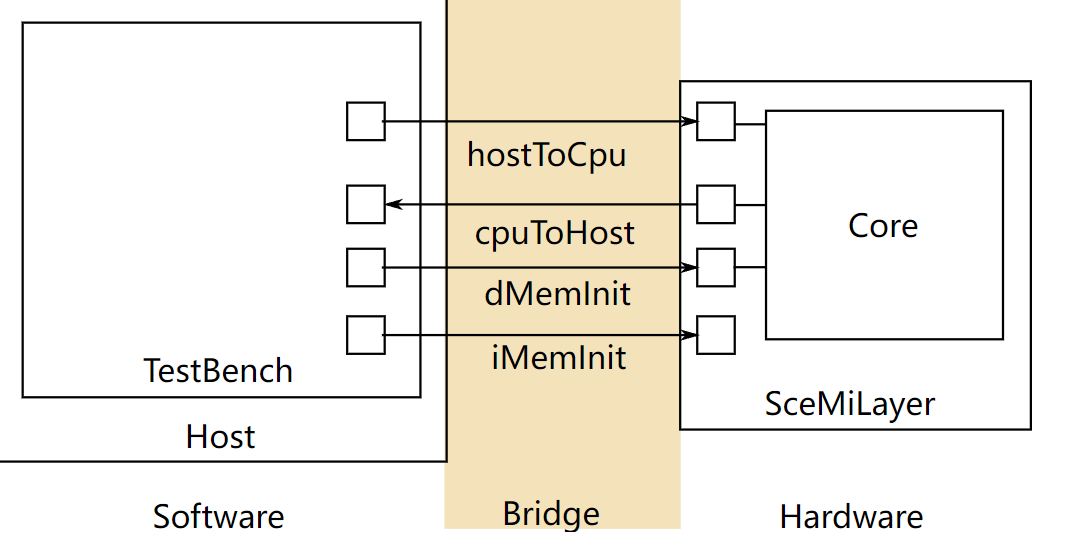

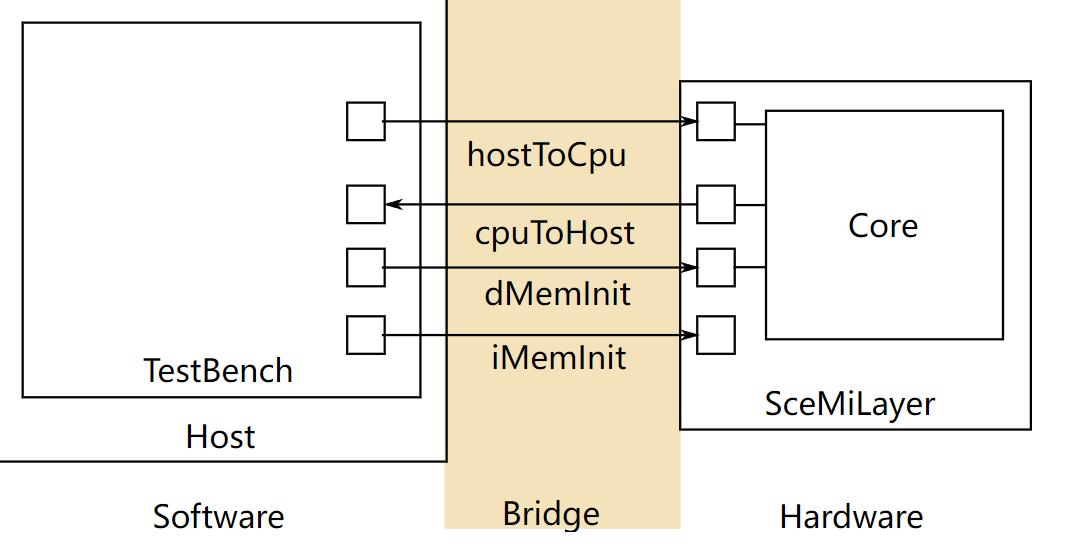

|

|---|

| 图 1: SceMi 设置 |

图 1 显示了本实验的 SceMi 设置。在设计和调试处理器时,我们经常需要另一个处理器的帮助,我们称之为宿主处理器(图 1 中标记为 "Host")。为了与宿主区分,我们

可以将你设计的处理器(图 1 中标记为 "Core")称为目标处理器。SceMiLayer 实例化来自指定处理器 BSV 文件的处理器和 SceMi 端口,用于处理器的 hostToCpu、cpuToHost、iMemInit 和 dMemInit 接口。SceMiLayer 还提供了一个 SceMi 端口,用于从测试台重置核心,允许在处理器上运行多个程序,而无需重新配置 FPGA。

由于我们只在本实验中在仿真中运行处理器,我们将绕过通过 iMemInit 和 dMemInit 接口初始化内存的耗时阶段。相反,我们将在仿真开始时直接使用内存初始化文件(.vmh 文件,介绍在编译汇编测试和基准测试中)加载内存所需的值,并将为每个程序重新启动仿真。

SceMiLayer 和 Bridge 的源代码位于 scemi/ 目录中。SceMi 链接在仿真时使用 TCP 桥,在实际 FPGA 上运行时使用 PCIe 桥。

构建项目

文件 scemi/sim/project.bld 描述了如何使用 build 命令构建项目,该命令是 Bluespec 安装的一部分。运行

build --doc

以获取有关 build 命令的更多信息。可以通过在 scemi/sim/ 目录中运行以下命令从头开始重新构建整个项目,其中 <proc_name> 是本实验指南中指定的处理器名称之一。这将覆盖之前通过 build 调用生成的可执行文件。

旁注:单独运行

build -v会打印一个错误消息,其中包含所有有效的处理器名称。

编译汇编测试和基准测试

我们的 SceMi 测试台运行指定为 Verilog Memory Hex (vmh) 格式的 RISC-V 程序。programs/assembly 目录包含汇编测试的源代码,而 programs/benchmarks 目录包含基准程序的源代码。我们将使用这些程序来测试处理器的正确性和性能。每个目录下都提供了一个 Makefile,用于生成 .vmh 格式的程序。

要编译所有汇编测试,请转到 programs/assembly 目录并运行 make。这将创建一个名为 programs/build/assembly 的新目录,其中包含所有汇编测试的编译结果。其下的 vmh 子目录包含所有的 .vmh 文件,而 dump 子目录包含所有转储的汇编代码。如果您忘记了这样做,将会看到以下错误消息:

-- assembly test: simple --

ERROR: ../../programs/build/assembly/vmh/simple.riscv.vmh does not exit, you need to first compile

同样,转到 programs/benchmarks 直接运行 make 命令来编译所有基准测试。编译结果将位于 programs/build/benchmarks 目录中。

现在编译汇编测试和基准测试。RISC-V 工具链应该能在所有 vlsifarm 机器上运行,但可能不适用于普通的 Athena 集群机器。我们建议您至少最初在 vlsifarm 机器上编译这些程序,然后,您可以使用普通的 Athena 集群机器来完成本实验。

programs/build/assembly/vmh 目录中的 .vmh 文件是汇编测试,它们如下介绍:

| 文件名 | 内容 |

|---|---|

simple.riscv.vmh | 包含汇编测试的 |

基本基础代码,并运行 100 条 NOP 指令("NOP" 代表 "无操作")。 |

| bpred_bht.riscv.vmh | 包含许多分支历史表可以很好预测的分支。 |

| bpred_j.riscv.vmh | 包含许多跳转指令,分支目标缓冲区可以很好地预测。 |

| bpred_ras.riscv.vmh | 包含许多通过寄存器进行的跳转,返回地址栈可以很好地预测。 |

| cache.riscv.vmh | 通过对别名在较小内存中的地址进行读写来测试缓存。 |

| <inst>.riscv.vmh | 测试特定指令。 |

每个汇编测试都会打印周期计数、指令计数和测试是否通过。simple.riscv.vmh 在单周期处理器上的示例输出为

102

103

PASSED

第一行是周期计数,第二行是指令计数,最后一行显示测试通过。指令计数比周期计数大,因为我们在读取周期计数 CSR(cycle)后读取指令计数 CSR(instret)。如果测试失败,最后一行将是

FAILED exit code = <failure code>

可以使用失败代码通过查看汇编测试的源代码来定位问题。

我们强烈建议在对处理器进行任何更改后重新运行所有汇编测试,以验证您没有破坏任何内容。当试图定位错误时,运行汇编测试将缩小问题指令的可能性。

programs/build/benchmarks/ 中的基准测试评估处理器的性能。这些基准测试简要介绍如下:

| 文件名 | 功能 |

|---|---|

median.riscv.vmh | 一维三元素中值过滤器。 |

multiply.riscv.vmh | 软件乘法。 |

qsort.riscv.vmh | 快速排序。 |

towers.riscv.vmh | 汉诺塔。 |

vvadd.riscv.vmh | 向量-向量加法。 |

每个基准测试都会打印其名称、周期计数、指令计数、返回值以及是否通过。单周期处理器上中值基准测试的示例输出为

Benchmark median

Cycles = 4014

Insts = 4015

Return 0

PASSED

如果基准测试通过,最后两行应为 Return 0 和 PASSED。如果基准测试失败,最后一行将是

FAILED exit code = <failure code>

性能以每周期指令数 (IPC) 衡量,我们通常希望提高 IPC。对于我们的流水线,IPC 永远不会超过 1,但我们应该能够通过良好的分支预测器和适当的旁路接近它。

使用测试台

我们的 SceMi 测试台是在宿主处理器上运行的软件,通过 SceMi 链接与 RISC-V 处理器交互,如图 1 所示。测试台启动处理器并处理 toHost 请求,直到处理器表明已成功或未成功完成。例如,测试输出中的周期计数实际上是处理器发出的 toHost 请求,请求打印一个整数,测试台通过打印该整数来处理这些请求。测试输出的最后一行(即 PASSED 或 FAILED)也是由测试台根据指示处理完成的 toHost 请求打印出来的。

要运行测试台,请首先按照[构建项目](http://csg.csail.mit.edu

/6.175/archive/2016/labs/lab5-riscv-intro.html#build)中的描述构建项目,并按照编译汇编测试和基准测试中的描述编译 RISC-V 程序。对于仿真,将创建可执行文件 bsim_dut,在启动测试台时应运行此文件。在仿真中,我们的 RISC-V 处理器总是加载文件 scemi/sim/mem.vmh 来初始化(数据)内存。因此,我们只需要复制我们想要运行的测试程序的 .vmh 文件(对应于指令内存)即可。

例如,要在仿真中在处理器上运行中值基准测试,你可以在 scemi/sim 目录下使用以下命令:

cp ../../programs/build/benchmarks/vmh/median.riscv.vmh mem.vmh

./bsim_dut > median.out &

./tb

为方便起见,我们在 scemi/sim 目录中提供了脚本 run_asm.sh 和 run_bmarks.sh,分别运行所有汇编测试和基准测试。bsim_dut 的标准输出(stdout)将重定向到 logs/<test name>.log 文件。

测试台输出

RISC-V 仿真有两个输出来源。这些包括 BSV $display 语句(包括消息和错误)和 RISC-V 打印语句。

BSV $display 语句由 bsim_dut 打印到 stdout。BSV 还可以使用 $fwrite(stderr, ...) 语句将内容打印到标准错误(stderr)。run_asm.sh 和 run_bmarks.sh 脚本将 bsim_dut 的 stdout 重定向到 logs/<test name>.log 文件。

RISC-V 打印语句(例如,programs/benchmarks/common/syscall.c 中的 printChar、printStr 和 printInt 函数)通过将字符和整数移至 mtohost CSR 来处理。测试台从 cpuToHost 接口读取,并在接收到字符和整数时将它们打印到 stderr。

练习 0(0 分): 通过转到

programs/assembly和programs/benchmarks目录并运行make编译测试程序。在scemi/sim目录中编译单周期 RISC-V 实现并通过以下命令测试它:$ build -v onecycle $ ./run_asm.sh $ ./run_bmarks.sh在编译 BSV 代码(即

build -v onecycle)期间,你可能会看到许多警告,出现在 "code generation for mkBridge starts" 之后。这些警告针对的是 SceMi 基础设施,通常你不需要关心它们。

实用提示: 在

scemi/sim目录中运行$ ./clean将删除使用

build构建的任何文件。

应对 AFS 超时问题

在运行构建工具时,AFS 超时错误可能如下所示:

...

code generation for mkBypassRFile starts

Error: Unknown position: (S0031)

Could not write the file `bdir_dut/mkBypassRFile.ba':

timeout

tee: ./onecycle_compile_for_bluesim.log: Connection timed out

!!! Stage compile_for_bluesim command encountered an error -- aborting build.

!!! Look in the log file at ./onecycle_compile_for_bluesim.log for more information.

由于各种原因,AFS 可能会超时,导致你的 Bluespec 构建失败。我们可以将构建目录移到 AFS 之外的位置,这可以缓解这个问题。首先,在 /tmp 中创建一个目

录:

mkdir /tmp/<your_user_name>-lab5

然后,打开 scemi/sim/project.bld,你会发现以下行:

[common]

hide-target

top-module: mkBridge

top-file: ../Bridge.bsv

bsv-source-directories: ../../scemi ../../src ../../src/includes

verilog-directory: vlog_dut

binary-directory: bdir_dut

simulation-directory: simdir_dut

info-directory: info_dut

altera-directory: quartus

xilinx-directory: xilinx

scemi-parameters-file: scemi.params

将 verilog-directory、binary-directory、simulation-directory 和 info-directory 更改为包含新的临时目录。例如,如果你的用户名是 "alice",你的新文件夹将是:

verilog-directory: /tmp/alice-lab5/vlog_dut

binary-directory: /tmp/alice-lab5/bdir_dut

simulation-directory: /tmp/alice-lab5/simdir_dut

info-directory: /tmp/alice-lab5/info_dut

完成本实验后,请记得删除你的 tmp 目录。如果你忘记了哪个临时目录是你的,查看 project.bld 或使用 ls -l 找到带有你的用户名的那个。

多周期 RISC-V 实现

提供的代码 src/OneCycle.bsv 实现了一个单周期哈佛架构 RISC-V 处理器。(哈佛架构具有独立的指令和数据存储器。)这个处理器能够在一个周期内完成操作,因为它具有独立的指令和数据存储器,且每个存储器在同一周期内对加载给出响应。在本实验的这一部分,你将制作两种不同的多周期实现,这些实现是由更现实的内存结构危害驱动的。

两周期冯·诺依曼架构 RISC-V 实现

哈佛架构的一种替代方案是冯·诺依曼架构。(冯·诺依曼架构也称为普林斯顿架构。)冯·诺依曼架构将指令和数据存储在同一内存中。如果只有一个内存同时保存指令和数据,则存在结构危害(假设内存不能在同一周期内被访问两次)。要解决这个危害,你可以将处理器分成两个周期:指令取取 和 执行。

- 在指令取取阶段,处理器从内存中读取当前指令并对其进行解码。

- 在执行阶段,处理器读取寄存器文件、执行指令、进行 ALU 操作、进行内存操作,并将结果写入寄存器文件。

创建两周期实现时,你将需要一个寄存器来在两个阶段间保持中间数据,以及一个状态寄存器来跟踪当前状态。中间数据寄存器将在指令取取期间被写入,在执行期间被读取。状态寄存器将在指令取取和执行之间切换。为了简化操作,你可以使用提供的 Stage 类型定义作为状态寄存器的类型。

练习 1(15 分): 在

TwoCycle.bsv中实现一个两周期 RISC-V 处理器,使用单一内存来存储指令和数据。已为你提供了单一内存模块mem供使用。通过转到scemi/sim目录并使用以下命令测试此处理器:$ build -v twocycle $ ./run_asm.sh $ ./run_bmarks.sh

四周期 RISC-V 实现,支持内存延迟

一周期和两周期 RISC-V 处理器假设内存具有组合读取功能;即如果你设置读取地址,那么读取的数据将在同一时钟周期内有效。大多数内存的读取具有更长的延迟:首先你设置地址位,然后在下一个时钟周期中读取结果才准备好。如果我们将之前 RISC-V 处理器实现中的内存更改为具有读取延迟的内存,那么我们将引入另一个结构危害:读取的结果不能在执行读取的同一周期中使用。这种结构危害可以通过将处理器进一步分成四个周期来避免:指令取取、指令解码、执行 和 写回。

- 指令取取阶段,如前所述,将地址线设置在内存的

PC上以读取当前指令。 - 指令解码阶段从内存获取指令、解码并读取寄存器。

- 执行阶段进行 ALU 操作,为存储指令写入数据到内存,并为读取指令设置内存地址线。

- 写回阶段从 ALU 获取结果或从内存读取结果(如果有的话)并写入寄存器文件。

这种处理器将需要更多的阶段间寄存器和扩展的状态寄存器。你可以使用修改过的 Stage 类型定义作为状态寄存器的类型。

由 mkDelayedMemory 实现的一周期读取延迟内存。此模块具有一个接口 DelayedMemory,该接口将内存请求和内存响应分离。请求仍以使用 req 方法的相同方式进行,但此方法不再同时返回响应。为了获取上一次读取请求的结果,你必须在稍后的时钟周期中调用 resp 动作值方法。存储请求不会生成任何响应,因此你不应为存储调用 resp 方法。更多细节可以在 src/includes 中的源文件 DelayedMemory.bsv 中找到。

练习 2(15 分):

如上所述,在

FourCycle.bsv中实现一个四周期 RISC-V 处理器。使用已包含在FourCycle.bsv中的延迟内存模块mem作为指令和数据内存。使用以下命令测试此处理器:$ build -v fourcycle $ ./run_asm.sh $ ./run_bmarks.sh

两阶段流水线 RISC-V 实现

虽然两周期和四周期实现允许处理器处理某些结构危害,但它们的性能并不理想。今天的所有处理器都是流水线化的,以提高性能,它们通常具有重复的硬件来避免诸如两周期和四周期 RISC-V 实现中所见的内存危害之类的结构危害。流水线引入了更多的数据和控制危害,处理器必须处理。为了避免数据危害,我们现在只研究两阶段流水线。

两阶段流水线使用两周期实现将工作分成两个阶段的方式,并使用独立的指令和数据内存并行运行这些阶段。这意味着当一条指令正在执行时,下一条指令正在被取出。对于分支指令,下一条指令并不总是已知的。这被称为控制危害。

为了处理这种控制危害,请在取指阶段使用 PC+4 预测器,并在分支错误预测发生

时纠正 PC。ExecInst 的 mispredict 字段在此处将非常有用。

练习 3(30 分):

在

TwoStage.bsv中实现一个两周期流水线 RISC-V 处理器,使用独立的指令和数据内存(具有组合读取功能,就像OneCycle.bsv中的内存一样)。你可以实现非弹性或弹性流水线。使用以下命令测试此处理器:$ build -v twostage $ ./run_asm.sh $ ./run_bmarks.sh

每周期指令数(IPC)

处理器性能通常以每周期指令数 (IPC) 衡量。这个指标是吞吐量的度量,即平均每周期完成的指令数。要计算 IPC,请将完成的指令数除以完成它们所需的周期数。单周期实现的 IPC 为 1.0,但它将不可避免地需要一个长的时钟周期来考虑传播延迟。结果,我们的单周期处理器并不像听起来那么快。两周期和四周期实现分别达到 0.5 和 0.25 的 IPC。

流水线实现的处理器将实现 0.5 到 1.0 IPC 之间的某处。分支错误预测会降低处理器的 IPC,因此你的 PC+4 下一地址预测器的准确性对于拥有高 IPC 的处理器至关重要。

讨论问题 1(5 分): 对于

run_bmarks.sh脚本测试的每个基准测试,两阶段流水线处理器的 IPC 是多少?

讨论问题 2(5 分): 从 IPC 计算下一地址预测器准确性的公式是什么?(提示,当 PC+4 预测正确时,执行一条指令需要多少周期?当预测错误时呢?)使用这个公式,每个基准测试的 PC+4 下一地址预测器的准确性是多少?

下一地址预测

现在,让我们使用更高级的下一地址预测器。其中一个例子是分支目标缓冲区 (BTB)。它根据当前的程序计数器 (PC) 的值预测要取出的下一条指令的位置。对于绝大多数指令来说,这个地址是 PC + 4(假设所有指令都是 4 字节)。但是,对于跳转和分支指令来说,情况并非如此。因此,BTB 包含了之前使用过的不仅仅是 PC+4 的下一个地址(“分支目标”)的表,以及生成这些分支目标的 PC。

Btb.bsv 包含了一个 BTB 的实现。其接口有两个方法:predPc 和 update。方法 predPc 接受当前 PC 并返回一个预测。方法 update 接受一个程序计数器和该程序计数器的指令的下一个地址,并将其添加为预测(如果不是 PC+4 的话)。

应当调用 predPc 方法来预测下一个 PC,而在分支解析后应调用 update 方法。执行阶段需要当前指令的 PC 和预测的 PC 来解析分支,因此你需要在流水线寄存器或 FIFO 中存储这些信息。

ExecInst 的 mispredict 和 addr 字段在这里将非常有用。需要注意的是,addr 字段并不总是下一条指令的正确 PC——它将是内存加载和存储的地址。我们可以进行高级推理,得出加载和存储从不出现错误的下一 PC 预

测,或者我们可以在执行阶段检查指令类型以得出下一 PC。

练习 4(10 分): 在

TwoStageBTB.bsv中,为你的两周期流水线 RISC-V 处理器添加一个 BTB。BTB 模块已在给定代码中实例化。使用以下命令测试此处理器:$ build -v twostagebtb $ ./run_asm.sh $ ./run_bmarks.sh

讨论问题 3(5 分): 使用 BTB 的两阶段流水线处理器的 IPC 是多少,对于

run_bmarks.sh脚本测试的每个基准测试而言,它有多大改进?

讨论问题 4(5 分): 添加 BTB 如何改变

bpred_*微基准测试的性能?(提示:bpred_j的周期数应该减少。)

讨论问题 5(可选): 完成这个实验你花了多长时间?

完成后记得使用 git push 推送你的代码。

额外讨论问题

讨论问题 6(5 额外分): 查看

bpred_*基准测试的汇编源代码并解释为什么每个基准测试改进、保持不变或变得更糟。

讨论问题 7(5 额外分): 你会如何改进 BTB 以改善

bpred_bht的结果?

© 2016 麻省理工学院。保留所有权利。

实验 6: 具有六阶段流水线和分支预测的 RISC-V 处理器

实验 6截止日期: 2016年11月7日,美东时间晚上11:59:59。

本实验的交付物为:

- 在

SixStage.bsv、Bht.bsv和SixStageBHT.bsv中完成的练习1至4的答案- 在

discussion.txt中完成的讨论问题1至9的答案

引言

本实验是你对现实中的 RISC-V 流水线和分支预测的介绍。在本实验结束时,你将拥有一个具有多种地址和分支预测器协同工作的六级 RISC-V 流水线。

注意:在本实验中,我们使用一位全局时期(而非无限分布时期)来终止错误路径指令。请学习全局时期的幻灯片:[pptx] [pdf],以理解全局时期方案。幻灯片的内容也将在教程中讲解。

实验设施的新增部分

新包含的文件

以下文件出现在 src/includes/ 中:

| 文件名 | 描述 |

|---|---|

FPGAMemory.bsv | FPGA上常见的块 RAM 的封装器。它的接口与上一个实验中的 DelayedMemory 相同。 |

SFifo.bsv | 三种可搜索的 FIFO 实现:基于流水线 FIFO、基于旁路 FIFO 和基于无冲突 FIFO 的实现。所有实现都假设在 enq 之前立即完成搜索。 |

Scoreboard.bsv | 基于可搜索 FIFO 的三种记分牌实现。流水线记分牌使用流水线可搜索 FIFO,旁路记分牌使用旁路可搜索 FIFO,无冲突记分牌使用无冲突可搜索 FIFO。 |

Bht.bsv | 一个空文件,在其中你将实现一个分支历史表(BHT)。 |

新的汇编测试

以下文件出现在 programs/assembly/src 中:

| 文件名 | 描述 |

|---|---|

bpred_j_noloop.S | 与 bpred_j.S 类似的汇编测试,但移除了外部循环。 |

新的源文件

以下文件出现在 src/ 中:

| 文件名 | 描述 |

|---|---|

TwoStage.bsv | 包含一个两级流水线的 RISC-V 处理器的初始文件。此处理器使用 BTB 进行地址预测。使用 twostage 目标编译。 |

SixStage.bsv | 一个空文件,在其中你将把两级流水线扩展为六级流水线。使用 sixstage 目标编译。 |

SixStageBHT.bsv | 一个空文件,在其中你将把分支历史表(BHT)整合到六级流水线中。使用 sixstagebht 目标编译。 |

SixStageBonus.bsv | 一个空文件,在其中你可以改进前一个处理器以获得额外学分。使用 sixstagebonus 目标编译。 |

测试改进

在前一个实验中,使用命令 build -v <proc_name>(从 scemi/sim/ 目录运行)用于构建 bsim_dut 和 tb。在本实验中,此命令构建 <proc_name>_dut 而非 `

bsim_dut`,因此切换处理器类型时不会删除其他处理器构建。

模拟脚本现在要求您指定目标处理器:

./run_asm.sh <proc_name>

./run_bmarks.sh <proc_name>

模拟单个测试要求您运行正确的模拟可执行文件:

cp ../../programs/build/{assembly,benchmarks}/vmh/<test_name>.riscv.vmh mem.vmh

./<proc_name>_dut > out.txt &

./tb

两级流水线:TwoStage.bsv

TwoStage.bsv 包含一个两级流水线的 RISC-V 处理器。这个处理器与你在上一个实验中构建的处理器不同,因为它在第一阶段读取寄存器值,存在数据危害。

讨论问题 1(10 分): 调试实践!

如果你将 BTB 替换为简单的

pc + 4地址预测,处理器仍然可以工作,但性能不佳。如果你用一个非常糟糕的预测器替换它,该预测器预测每个pc的下一个指令是pc,它应该仍然可以工作,但性能会更差,因为每个指令都需要重定向(除非指令回到其自身)。如果你真的将预测设置为pc,你会在汇编测试中得到错误;第一个错误将来自于cache.riscv.vmh。

- 你得到的错误是什么?

- 处理器中发生了什么导致这种情况?

- 为什么你在 PC+4 和 BTB 预测器中没有得到这个错误?

- 你将如何修复它?

你实际上不必修复这个错误,只需回答问题。(提示:查看

ExecInst结构的addr字段。)

六级流水线:SixStage.bsv

六级流水线应该分为以下阶段:

- 指令取回 —— 从 iMem 请求指令并更新 PC

- 解码 —— 接收来自 iMem 的响应并解码指令

- 寄存器取值 —— 从寄存器文件读取

- 执行 —— 执行指令并在必要时重定向处理器

- 内存 —— 向 dMem 发送内存请求

- 写回 —— 接收来自 dMem 的内存响应(如果适用)并写入寄存器文件

应将 IMemory 和 DMemory 实例替换为 FPGAMemory 实例,以便在 FPGA 上实现。

练习 1(20 分):从

TwoStage.bsv中的两级实现开始,将每个内存替换为FPGAMemory并在SixStage.bsv中扩展为六级流水线。在模拟中,基准测试qsort可能需要更长时间(助教的桌面上为21秒,vlsifarm 机器上可能需要更长时间)。

注意,两级实现使用无冲突寄存器文件和记分牌。然而,你可以使用流水线或旁路版本的这些组件以获得更好的性能。同样,你可能想改变记分牌的大小。

讨论问题 2(5 分):你有什么证据表明所有流水线阶段可以在同一个周期内触发?

讨论问题 3(5 分):在你的六级流水线处理器中,纠正错误预测的指令需要多少个周期?

讨论问题 4(5 分):如果一条指令依赖于流水线中紧接其前的指令的结果,这条指令会延迟多少个周期?

讨论问题 5(5 分):你为每个基准测试获得了多少 IPC?

添加分支历史表:SixStageBHT.bsv

分支历史表(BHT)是一个跟踪分支历史的结构,用于方向预测。你的 BHT 应该使用从程序计数器(PC)中取得的参数化位数作为索引——通常是从第 n+1 位到第 2 位,因为第 1 和 0 位总是零。每个索引应该有一个两位的饱和计数器。不要在 BHT 中包含任何有效位或标签;我们不关心我们的预测中的别名问题。

练习 2(20 分):在

Bht.bsv中实现一个使用参数化位数作为表索引的分支历史表。

讨论问题 6(10 分):规划!

这个实验中最困难的事情之一是正确地训练和整合 BHT 到流水线中。在仍然看到不错的结果的情况下,可以犯很多错误。通过基于方向预测的基础知识制定一个好的计划,你将避免许多这些错误。

对于这个讨论问题,说明你将 BHT 整合到流水线中的计划。以下问题应该有助于指导你:

BHT 将被放置在流水线的哪个位置?

哪个流水线阶段执行对 BHT 的查找?

在哪个流水线阶段将使用 BHT 预测?

BHT 预测是否需要在流水线阶段之间传递?

如何使用 BHT 预测重定向 PC?

你需要添加新的 epoch 吗? >

如何处理重定向消息?

如果重定向,你需要改变当前指令及其数据结构吗?

你将如何训练 BHT?

哪个阶段产生 BHT 的训练数据?

哪个阶段将使用接口方法来训练 BHT?

如何发送训练数据?

你将为哪些指令训练 BHT?

你如何知道你的 BHT 是否有效?

练习 3(20 分):将 256 个条目(8 位索引)的 BHT 集成到

SixStage.bsv的六级流水线中,并将结果放入SixStageBHT.bsv中。

讨论问题 7(5 分):与

SixStage.bsv处理器相比,你在bpred_bht.riscv.vmh测试中看到了多少提升?

练习 4(10 分):将 JAL 指令的地址计算上移到解码阶段,并使用 BHT 重定向逻辑来重定向这些指令。

讨论问题 8(5 分):与

SixStage.bsv处理器相比,你在bpred_j.riscv.vmh和bpred_j_noloop.riscv.vmh测试中看到了多少提升?

讨论问题 9(5 分):你在每个基准测试中获得了多少 IPC?与原始六级流水线相比,这有多大提升?

讨论问题 10(选做):完成这个实验室你用了多长时间?

完成后记得使用 git push 将你的代码推送。

额外改进:SixStageBonus.bsv

这一节探讨了两种加速间接跳转到寄存器中存储的地址的方法(JALR)。

练习 5(10 附加分):JALR 指令在寄存器取值阶段已知目标地址。在寄存器取值阶段为 JALR 指令添加一个重定向路径,并将结果放入

SixStageBonus.bsv中。bpred_ras.riscv.vmh测试应该会有稍微更好的结果,有了这种改进。

大多数程序中找到的 JALR 指令被用作从函数调用返回。这意味着这种返回的目标地址是由先前的 JAL 或 JALR 指令写入返回地址寄存器 x1(也称为 ra)的,该指令启动了函数调用。

为了更好地预测 JALR 指令,我们可以在处理器中引入返回地址堆栈(RAS)。根据 RISC-V ISA,使用 rd=x0 和 rs1=x1 的 JALR 指令通常用作函数调用的返回指令。此外,使用 rd=x1 的 JAL 或 JALR 指令通常用作跳转以启动函数调用。因此,我们应该为带有 rd=x1 的 JAL/JALR 指令推送 RAS,并为带有 rd=x0 和 rs1=x1 的 JALR 指令弹出 RAS。

练习 6(10 附加分):实现一个返回地址堆栈,并将其集成到处理器的解码阶段(

SixStageBonus.bsv)。8 个元素的堆栈应该足够。如果堆栈满了,你可以简单地丢弃最旧的数据。bpred_ras.riscv.vmh测试应该会有更好的结果,有了这种改进。如果你在一个单独的 BSV 文件中实现了 RAS,请确保将其添加到 git 仓库中进行评分。

© 2016 麻省理工学院。保留所有权利。

实验 7: 带有 DRAM 和缓存的 RISC-V 处理器

实验 7截止日期: 2016年11月18日,美东时间晚上11:59:59。

你需要提交的实验 7内容包括:

- 在

WithoutCache.bsv和WithCache.bsv中完成练习 1、2 和 4 的答案- 在

discussion.txt中完成讨论问题 1 到 3 的答案

简介

现在,你已经拥有了一个具有分支目标和方向预测器(BTB 和 BHT)的六级流水线 RISC-V 处理器。不幸的是,你的处理器只能运行能够适应 256 KB FPGA块 RAM 的程序。这对于我们运行的小型基准程序(如250项快速排序)来说是足够的,但大多数有趣的应用程序都远大于 256 KB。幸运的是,我们使用的 FPGA 板配备了 1 GB DDR3 DRAM,可供 FPGA 访问。这非常适合存储大型程序,但由于 DRAM 读取延迟相对较长,这可能会影响性能。

本实验将重点使用 DRAM 而非块 RAM 作为主程序和数据存储来存储更大的程序,并添加缓存以减少长延迟 DRAM 读取对性能的影响。

首先,你将编写一个转换模块,将 CPU 内存请求转换为 DRAM 请求。此模块大大扩展了你的程序存储空间,但由于几乎每个周期都要从 DRAM 读取,你的程序将运行得更慢。接下来,你将实现一个缓存来减少需要从 DRAM 读取的次数,从而提高处理器性能。最后,你将为 FPGA 合成你的设计,并运行需要 DRAM 和长时间运行的非常大的基准测试。

测试基础设施的变化

如果我们每次运行一个新测试就必须重新配置 FPGA,那么运行所有的组装测试将需要很长时间(重新配置 FPGA 大约需要一分钟)。由于我们没有更改硬件,我们将只配置一次 FPGA,然后每次想要运行新测试时进行软重置。软件测试台(位于 scemi/Tb.cpp)将启动软重置,将 *.vmh 文件写入您的 FPGA 的 DRAM,并启动新测试。在每个测试开始之前,软件测试台还会打印出基准测试的名称,以帮助调试。在软件仿真(无 FPGA)中,我们还将模拟将 *.vmh 文件写入 DRAM 的过程,因此仿真时间也会比以前更长。

以下是使用名为 withoutcache 的处理器来仿真 simple.S 和 add.S 组装测试的示例命令:

cd scemi/sim

./withoutcache_dut > log.txt &

./tb ../../programs/build/assembly/vmh/simple.riscv.vmh ../../programs/build/assembly/vmh/add.riscv.vmh

这是样本输出:

---- ../../programs/build/assembly/vmh/simple.riscv.vmh ----

1196

103

通过

---- ../../programs/build/assembly/vmh/add.riscv.vmh ----

5635

427

通过

SceMi 服务线程完成!

我们还提供了两个脚本 run_asm.sh 和 run_bmarks.sh 来分别运行所有组装测试和基准测试。例如,我们可以使用以下命令测试处理器 withoutcache:

./run_asm.sh withoutcache

./run_bmarks.sh withoutcache

BSV 的标准输出将分别重定向到 asm.log 和 bmarks.log。

DRAM 接口

你将在本课程中使用的 VC707 FPGA 板配备了 1 GB DDR3 DRAM。DDR3 内存具有 64 位宽的数据总线,但每次传输都会发送八个 64 位块,因此实际上它的作用就像一个 512 位宽的内存。DDR3 内存具有高吞吐量,但其读取延迟也比较高。

Sce-Mi 接口为我们生成了 DDR3 控制器,我们可以通过 MemoryClient 接口连接到它。本实验中为你提供的 typedef 使用了 BSV 的内存包中的类型(见 BSV 参考指南或 $BLUESPECDIR/BSVSource/Misc/Memory.bsv 的源代码)。以下是 src/includes/MemTypes.bsv 中与 DDR3 内存相关的一些 typedef:

typedef 24 DDR3AddrSize;

typedef Bit#(DDR3AddrSize) DDR3Addr;

typedef 512 DDR3DataSize;

typedef Bit#(DDR3DataSize) DDR3Data;

typedef TDiv#(DDR3DataSize, 8) DDR3DataBytes;

typedef Bit#(DDR3DataBytes) DDR3ByteEn;

typedef TDiv#(DDR3DataSize, DataSize) DDR3DataWords;

// 下面的 typedef 等同于:

// typedef struct {

// Bool write;

// Bit#(64) byteen;

// Bit#(24) address;

// Bit#(512) data;

// } DDR3_Req deriving (Bits, Eq);

typedef MemoryRequest#(DDR3AddrSize, DDR3DataSize) DDR3_Req;

// 下面的 typedef 等同于:

// typedef struct {

// Bit#(512) data;

// } DDR3_Resp deriving (Bits, Eq);

typedef MemoryResponse#(DDR3DataSize) DDR3_Resp;

// 下面的 typedef 等同于:

// interface DDR3_Client;

// interface Get#( DDR3_Req ) request;

// interface Put#( DDR3_Resp ) response;

// endinterface;

typedef MemoryClient#(DDR3AddrSize, DDR3DataSize) DDR3_Client;

DDR3_Req

对 DDR3 的读取和写入请求与对 FPGAMemory 的请求不同。最大的区别是字节启用信号 byteen。

write—— 布尔值,指定此请求是写入请求还是读取请求。byteen—— 字节启用,指定将写入哪些 8 位字节。此字段对于读取请求无效。如果你想写入所有 16 字节(即 512 位),你将需要将此设置为全部为 1。你可以使用文字'1(注意单引号)或maxBound来实现。address—— 读取或写入请求的地址。DDR3 内存以 512 位块为单位进行寻址,因此地址 0 指的是第一个 512 位块,地址 1 指的是第二个 512 位块。这与 RISC-V 处理器使用的字节寻址非常不同。data—— 用于写入请求的数据值。

DDR3_Resp

DDR3 内存只对读取发送响应,就像 FPGAMemory 一样。内存响应类型是一种结构体——因此,你将不会直接接收到 Bit#(512) 值,而必须访问响应中的 data 字段以获取 Bit#(512) 值。

DDR3_Client

DDR3_Client 接口由一个 Get 子接口和一个 Put 子接口组成。这个接口由处理器公开,Sce-Mi 基础设施将其连接到 DDR3 控制器。你无需担心构建此接口,因为示例代码中已为你完成。

示例代码

这里是一些展示如何构建 FIFOs 以及 DDR3 内存接口初始化接口的示例代码,

此示例代码提供在 src/DDR3Example.bsv 中。

import GetPut::*;

import ClientServer::*;

import Memory::*;

import CacheTypes::*;

import WideMemInit::*;

import MemUtil::*;

import Vector::*;

// 其他包和类型定义

(* synthesize *)

module mkProc(Proc);

Ehr#(2, Addr) pcReg <- mkEhr(?);

CsrFile csrf <- mkCsrFile;

// 其他处理器状态和组件

// 接口 FIFO 到真实的 DDR3

Fifo#(2, DDR3_Req) ddr3ReqFifo <- mkCFFifo;

Fifo#(2, DDR3_Resp) ddr3RespFifo <- mkCFFifo;

// 初始化 DDR3 的模块

WideMemInitIfc ddr3InitIfc <- mkWideMemInitDDR3( ddr3ReqFifo );

Bool memReady = ddr3InitIfc.done;

// 将 DDR3 包装成 WideMem 接口

WideMem wideMemWrapper <- mkWideMemFromDDR3( ddr3ReqFifo, ddr3RespFifo );

// 将 WideMem 接口分割为两个(多路复用方式使用)

// 这个分割器只在重置后生效(即 memReady && csrf.started)

// 否则 guard 可能失败,我们将获取到垃圾 DDR3 响应

Vector#(2, WideMem) wideMems <- mkSplitWideMem( memReady && csrf.started, wideMemWrapper );

// 指令缓存应使用 wideMems[1]

// 数据缓存应使用 wideMems[0]

// 在软重置期间,一些垃圾可能进入 ddr3RespFifo

// 这条规则将排空所有此类垃圾

rule drainMemResponses( !csrf.started );

ddr3RespFifo.deq;

endrule

// 其他规则

method ActionValue#(CpuToHostData) cpuToHost if(csrf.started);

let ret <- csrf.cpuToHost;

return ret;

endmethod

// 将 ddr3RespFifo empty 添加到 guard 中,确保垃圾已被排空

method Action hostToCpu(Bit#(32) startpc) if ( !csrf.started && memReady && !ddr3RespFifo.notEmpty );

csrf.start(0); // 只有 1 个核心,id = 0

pcReg[0] <= startpc;

endmethod

// 为测试台提供 DDR3 初始化的接口

interface WideMemInitIfc memInit = ddr3InitIfc;

// 接口到真实 DDR3 控制器

interface DDR3_Client ddr3client = toGPClient( ddr3ReqFifo, ddr3RespFifo );

endmodule

在上述示例代码中,ddr3ReqFifo 和 ddr3RespFifo 作为与真实 DDR3 DRAM 的接口。在仿真中,我们提供了一个名为 mkSimMem 的模块来模拟 DRAM,该模块在 scemi/SceMiLayer.bsv 中实例化。在 FPGA 合成中,DDR3 控制器在顶层模块 mkBridge 中实例化,位于 $BLUESPECDIR/board_support/bluenoc/bridges/Bridge_VIRTEX7_VC707_DDR3.bsv。还有一些胶合逻辑在 scemi/SceMiLayer.bsv 中。

在示例代码中,我们使用模块 mkWideMemFromDDR3 将 DDR3_Req 和 DDR3_Resp 类型转换为更友好的 WideMem 接口,该接口定义在 src/includes/CacheTypes.bsv 中。

共享 DRAM 接口

示例代码中仅暴露了单一的与 DRAM 的接口,但你有两个模块将使用它:指令缓存和数据缓存。如果它们都向 ddr3ReqFifo 发送请求,并且都从 ddr3RespFifo 获取响应,那么它们的响应可能会混淆。为了处理这个问题,你需要一个单独的 FIFO 来跟踪响应应当返回的顺序。每个加载请求都与一个入队到排序 FIFO 的操作配对,该操作指定谁应该获取响应。

为了简化这个过程,我们提供了模块 mkSplitWideMem 来将 DDR3 FIFOs 分割为两个 WideMem 接口。这个模块定义在 src/includes/MemUtils.bsv 中。为了防止 mkSplitWideMem 过早采取行动并显示出预期之外的行为,我们将其第一个参数设置为 memReady && csrf.started,以在处理器启动之前冻结它。这也可以避免与 DRAM 内容初始化发生调度冲突。

处理软重置问题

如前所述,你将在启动每个新测试前对处理器状态进行软重置。在软重置期间,由于某些跨时钟域问题,一些垃圾数据可能会入队到 ddr3RespFifo 中。为了处理这个问题,我们添加了 drainMemResponses 规则来排空垃圾数据,并在 hostToCpu 方法的保护条件中添加了检查 drainMemResponses 是否为空的条件。

建议:在每个管道阶段的规则中添加

csrf.started到 guard 中。这可以防止在处理器启动之前 DRAM 被访问。

从前一个实验室迁移代码

本实验室提供的代码非常相似,但存在一些差异需要注意。大多数差异都在提供的示例代码 src/DDR3Example.bsv 中显示。

修改的 Proc 接口

Proc 接口现在只有单一的内存初始化接口,以匹配统一的 DDR3 内存。此内存初始化接口的宽度已扩展到每次传输 512 位。这个新的初始化接口的类型是 WideMemInitIfc,在 src/includes/WideMemInit.bsv 中实现。

空文件

本实验室的两个处理器实现:src/WithoutCache.bsv 和 src/WithCache.bsv 最初是空的。你应该将代码从 SixStageBHT.bsv 或 SixStageBonus.bsv 复制过来作为这些处理器的起点。src/includes/Bht.bsv 也是空的,因此你还需要将前一个实验室的代码复制过来。

新文件

以下是在 src/includes 文件夹下提供的新文件概述:

| 文件名 | 描述 |

|---|---|

Cache.bsv | 一个空文件,你将在本实验室中实现缓存模块。 |

CacheTypes.bsv | 关于缓存的类型和接口定义的集合。 |

MemUtil.bsv | 关于 DDR3 和 WideMem 的有用模块和函数的集合。 |

SimMem.bsv | 在仿真中使用的 DDR3 内存。它有 10 个周期的流水线访问延迟,但额外的胶合逻辑可能会增加访问 DRAM 的总延迟。 |

WideMemInit.bsv | DDR3 初始化模块。 |

MemTypes.bsv 也有一些变化。

使用 DRAM 而不使用缓存的处理器 WithoutCache.bsv

练习 1 (10 分):在

Cache.bsv中实现一个名为mkTranslator的模块,它接受与 DDR3 内存相关的某些接口(例如WideMem),并返回一个Cache接口(见CacheTypes.bsv)。该模块不应进行任何缓存,只需从

MemReq到 DDR3 请求(如果使用WideMem接口,则为WideMemReq)以及从 DDR3 响应(如果使用WideMem接口,则为CacheLine)到MemResp的转换。这将需要一些内部存储来跟踪你从主存返回的缓存行中需要哪个字。将mkTranslator集成到文件WithoutCache.bsv中的六阶段管线中(即你不应再使用mkFPGAMemory)。你可以通过在scemi/sim/目录下运行以下命令来构建这个处理器:$ build -v withoutcache并通过运行以下命令来测试此处理器:

$ ./run_asm.sh withoutcache和

$ ./run_bmarks.sh withoutcache在

scemi/sim/目录下。

讨论问题 1 (5 分):记录

./run_bmarks.sh withoutcache的结果。你在每个基准测试中看到的 IPC 是多少?

使用带有缓存的 DRAM 的处理器 WithCache.bsv

通过使用模拟的 DRAM 运行基准测试,你应该已经注意到你的处理器速度大大减慢了。通过记住之前的 DRAM 加载到缓存中,你可以重新提升处理器的速度,正如课堂上所描述的那样。

练习 2 (20 分):实现一个名为

mkCache的模块作为直接映射缓存,仅在替换缓存行时写回,并且仅在写缺失时分配。该模块应接受一个

WideMem接口(或类似的东西)并暴露一个Cache接口。使用CacheTypes.bsv中的typedefs来定义你的缓存大小和Cache接口。你可以使用寄存器向量或寄存器文件来实现缓存中的数组,但寄存器向量更容易指定初始值。将此缓存集成到WithoutCache.bsv中的相同管线,并将其保存在WithCache.bsv中。你可以通过在scemi/sim/目录下运行以下命令来构建此处理器:$ build -v withcache并通过运行以下命令来测试此处理器:

$ ./run_asm.sh withcache和

$ ./run_bmarks.sh withcache在

scemi/sim/目录下。

讨论问题 2 (5 分):记录

./run_bmarks.sh withcache的结果。你在每个基准测试中看到的 IPC 是多少?

运行大型程序

通过添加对 DDR3 内存的支持,你的处理器现在可以运行比我们一直在使用的小基准测试更大的程序。不幸的是,这些大型程序需要更长的运行时间,在许多情况下,模拟完成需要太长时间。现在是尝试 FPGA 合成的好时机。通过在 FPGA 上实现你的处理器,由于设计在硬件而非软件中运行,你将能够更快地运行这些大型程序。

练习 3 (0 分,但你仍然应该做这个): 在为 FPGA 合成之前,让我们试试看一个在模拟中运行时间很长的程序。程序

./run_mandelbrot.sh运行一个基准测试,使用 1 和 0 打印曼德博集合的方形图像。运行此基准测试以查看它在实时中的运行速度有多慢。请不要等待此基准测试完成,可以使用 Ctrl-C 提前终止。

为 FPGA 合成

你可以通过进入 scemi/fpga_vc707 文件夹并执行以下命令开始为 WithCache.bsv 进行 FPGA 合成:

vivado_setup build -v

这个命令将需要很长时间(大约一小时)并消耗大量计算资源。你可能想选择一个负载较轻的 vlsifarm 服务器。你可以使用 w 查看有多少人登录,并可以使用 top 或 uptime 查看正在使用的资源。

一旦完成,你可以通过运行 ./submit_bitfile 命令将你的 FPGA 设计提交给共享的 FPGA 板进行测试,并可以使用 ./get_results 检查结果。get_results 脚本将在你的结果准备好之前持续显示当前的 FPGA 状态。在 FPGA 上执行可能需要几分钟时间,如果其他学生也提交了作业,则可能需要更长时间。FPGA 上的 *.vmh 程序文件位于 /mit/6.175/fpga-programs。它包括在模拟中使用的所有程序,以及具有较大输入的基准程序(在 large 子目录中)。你还可以通过在 programs/benchmarks 文件夹中执行 make -f Makefile.large 生成大型基准的 *.vmh 文件。然而,这些 *.vmh 文件在软件中模拟将需要很长时间。

如果你想检查 FPGA 的状态,可以运行 ./fpga_status 命令。

练习 4 (10 分):为 FPGA 合成

WithCache.bsv并将你的设计发送到共享的 FPGA 执行。获取正常和大型基准的结果并将它们添加到discussion.txt。

讨论问题 3 (10 分):曼德博程序在你的处理器中需要多少周期来执行?当前的 FPGA 设计有效时钟速度为 50 MHz。曼德博程序以秒为单位执行需要多长时间?估计你在硬件与模拟中看到的速度提升,通过估计在模拟中运行

./run_mandelbrot.sh将需要多长时间(以墙钟时间为单位)。

讨论问题 4 (可选):完成这个实验室花了你多长时间?

完成后,请提交你的代码并执行 git push。

来自你友好的助教的提示: 如果你在 FPGA 测试中遇到任何问题,请尽快通过电子邮件通知我。基础设施并不非常稳定,但及早通知我有关任何问题将使它们更快得到解决。

值得关注的内容:(添加于 11 月 17 日) 让我们分

析一些 FPGA 合成的结果。

>

>查看 scemi/fpga_vc707/xilinx/mkBridge/mkBridge.runs/synth_1/runme.log,搜索“Report Instance Areas”。此报告显示了你的设计使用的单元数量的分解。scemi_dut_dut_dutIfc_m_dut 使用了多少个单元?总共有多少个单元?(查看 top)。

>

>看看 scemi/fpga_vc707/xilinx/mkBridge/mkBridge.runs/impl_1/mkBridge_utilization_placed.rpt。这包含了你的设计对 FPGA 资源的使用报告(这些资源被组织为“切片”,与单元不同)。在“1. 切片逻辑”下,你可以看到你的整个设计(包括存储器控制器和 Sce-Mi 接口)使用了多少切片。现在看看 scemi/fpga_vc707/xilinx/mkBridge/mkBridge.runs/impl_1/mkBridge_timing_summary_routed.rpt。这里有一些时序信息,最重要的是,你的 CPU 中最长的组合路径的延迟。在标记为“Max Delay Paths”的部分中查找“scemi_dut_dut_dutIfc_m_dut/[signal]” 的出现。"Slack" 是“所需时间”(本质上是时钟周期)与“到达时间”(你的信号传播通过设计的这部分所需时间)之间的差异。你在路径中看到了什么(看“Netlist Resource(s)”列)?为什么我们可能在最大延迟路径中看到 EHR(即关键路径)?(见顶部)

© 2016 麻省理工学院. 版权所有。

实验 8: 具有异常处理的 RISC-V 处理器

实验8截止日期:11月25日星期五晚上11:59:59 EST。

你在实验8中的(极少量的)交付物包括:

- 在

ExcepProc.bsv中完成的练习1的答案- 在

discussion.txt中完成的讨论问题1的答案

引言

在本实验中,你将为一个单周期RISC-V处理器添加异常处理功能。有了异常支持,我们将能够做到以下两件事:

- 实现

printInt()、printChar()和printStr()函数作为系统调用。 - 在软件异常处理程序中模拟不支持的乘法指令(

mul)。

我们使用单周期处理器,这样你可以专注于异常处理的工作方式,而不需要考虑流水线带来的复杂性。

你已经得到了所有必需的程序来测试你的处理器。你只需要添加硬件支持来运行异常。以下部分涵盖了处理器中发生了哪些变化以及你需要做什么。

控制状态寄存器(CSRs)

src/includes/CsrFile.bsv中的mkCsrFile模块已经扩展了一些新的CSRs,用于实现异常处理。

以下是mkCsrFile模块中新增CSRs的总结。你的软件可以使用csrr、csrw和csrrw指令操作这些CSRs。

| 控制寄存器名称 | 描述 |

|---|---|

mstatus | 该寄存器的低12位存储了一个包含特权/用户模式(PRV)和中断使能(IE)位的4元素栈。每个栈元素宽3位。例如,mstatus[2:0]对应于栈顶,包含当前的PRV和IE位。具体来说,mstatus[0]是IE位,如果IE=1,则中断被使能。mstatus[2:1]包含PRV位。如果处理器处于用户模式,则应设置为2'b00;如果处理器处于机器(特权)模式,则应设置为2'b11。其他栈元素(例如mstatus[5:3], ..., mstatus[11:9])具有相同的构造。当发生异常时,栈将通过左移3位“推”;结果,新的PRV和IE位(例如机器模式和中断禁用)现在存储在mstatus[2:0]中。相反,当我们使用eret指令从异常中返回时,栈通过右移3位“弹出”。mstatus[2:0]将包含它们原来的值,mstatus[11:9]被分配给(用户模式,中断使能)。 |

mcause | 当异常发生时,原因存储在mcause中。ProcTypes.bsv包含了我们将在本实验中实现的两个异常原因值:excepUnsupport:不支持的指令异常。excepUserECall:系统调用。 |

mepc | 当异常发生时,导致异常的指令的PC存储在mepc中。 |

mscratch | 它存储了一个“安全”数据段的指针,可以在发生异常时用来存储所有通用目的寄存器(GPR)的值。这个寄存器在本实验中完全由软件操作。 |

mtvec | 陷阱向量(trap vector) 是一个只读寄存器,它存储异常处理程序的起始地址。当发生异常时,处理器应将PC设置为mtvec。 |

mkCsrFile

模块还包含了一些额外的接口方法,应该是不言自明的。

解码逻辑

解码逻辑也已扩展以支持异常。以下三个新指令的功能总结如下:

| 指令 | 描述 |

|---|---|

eret | 这条指令用于从异常处理中返回。它被解码为新的iType为ERet,其他一切都无效且不被执行。 |

ecall(或scall) | 这条指令是系统调用指令。它被解码为新的iType为ECall,其他一切都无效且不被执行。 |

csrrw rd, csr, rs1 | 这条指令将csr的值写入rd,并将rs1的值写入csr。也就是说,它执行rd <- csr; csr <- rs1。rd和rs1都是GPR,而csr是CSR。这条指令取代了我们之前使用的csrw指令,因为csrw只是csrrw的一个特例。这条指令被解码为新的iType为Csrrw。由于csrrw将写入两个寄存器,ProcTypes.bsv中的ExecInst类型增加了一个新字段“Data csrData”,其中包含要写入csr的数据。 |

eret和csrrw指令仅在机器(特权)模式下允许。为了检测在用户模式下非法使用这些指令,Decode.bsv中的decode函数接受第二个参数“Bool inUserMode”。如果处理器处于用户模式,则该参数应设置为True。如果解码函数检测到在用户模式下非法使用eret和csrrw指令,则指令的iType将被设置为新的值NoPermission,处理器稍后将报告此错误。

处理器

我们已经提供了大部分处理器代码在ExcepProc.bsv中,你只需要填写四个标有“TODO”注释的地方:

- 为

decode函数添加第二个参数。 - 处理“不支持的指令”异常:设置

mepc和mcause,将新的PRV和IE位推入mstatus的栈中,并更改PC到mtvec。你可能需要使用mkCsrFile的startExcep方法。 - 处理系统调用:系统调用可以像不支持的指令异常一样处理。

- 处理

eret指令:弹出mstatus的栈并更改PC到mepc。你可能需要使用mkCsrFile的eret方法。

测试程序

测试程序可以分为三类:我们之前见过的汇编测试和基准测试,以及一组新的测试处理器异常处理功能的程序。

旧程序

汇编测试和基准测试在机器模式下运行(这些被称为“裸机运行”),不会触发异常。它们可以通过进入programs/assembly和programs/benchmarks文件夹并运行make来编译。

新程序

第三类程序涉及异常。这些程序从机器模式开始,但立即降至用户模式。所有打印函数都实现为系统调用,不支持的乘法指令(mul)可以在软件异常处理程序中模拟。这些程序的源代码也位于programs/benchmarks文件夹下,但它们链接到programs/benchmarks/excep_common文件夹中的库(而不是programs/benchmarks/common)。

编译这些程序,你可以使用以下

命令:

cd programs/benchmarks

make -f Makefile.excep

编译结果将出现在programs/build/excep文件夹中。(如果你忘记了,你会收到一个错误消息,如"ERROR: ../../programs/build/excep/vmh/median.riscv.vmh does not exit [sic], you need to first compile"。)

这些程序不仅包括我们之前看到的原始基准测试,还包括两个新程序:

mul_inst:这是原始multiply基准的一个替代版本,直接使用mul指令。permission:这个程序在用户模式下执行csrrw指令,并应该失败!

实现异常

练习1(40分):如上所述,在

ExcepProc.bsv中的处理器上实现异常。你可以通过运行build -v excep在

scemi/sim中构建处理器。我们提供了以下脚本在仿真中运行测试程序:

run_asm.sh:在机器模式下运行汇编测试(无异常)。run_bmarks.sh:在机器模式下运行基准测试(无异常)。run_excep.sh:在用户模式下运行基准测试(有异常)。run_permit.sh:在用户模式下运行permission程序。你的处理器应该通过前三个脚本(

run_asm.sh、run_bmarks.sh和run_excep.sh)中的所有测试,但应该在最后一个脚本(run_permit.sh)中报告错误并终止。注意,在运行run_permit.sh时看到bsim_dut输出的错误消息后,软件测试台tb仍在运行,因此你需要按Ctrl-C来终止它。

讨论问题1(10分):在即将到来的感恩节假期的精神中,列举一些你感激只需在单周期处理器上做这个实验的理由。为了帮助你开始:如果你在处理流水线实现,异常会引入哪些新的危险?

讨论问题2(可选):你完成这个实验花了多长时间?

完成后记得提交你的代码并git push。

© 2016 麻省理工学院。版权所有。

项目1: 存储队列

第一部分的项目没有明确的截止日期。s

然而,整个项目将在12月14日,星期三下午3点EST举行的项目展示时到期。

在最终项目的第一部分,我们将在实验7中设计的阻塞数据缓存(D$)中添加存储队列。

克隆项目代码

由于这是一个双人完成的项目,你需要首先联系我并提供你们小组成员的用户名。使用以下命令克隆你的Git仓库,其中${PERSON1}和${PERSON2}是你们的Athena用户名,并且${PERSON1}在字母顺序上排在${PERSON2}之前:

$ git clone /mit/6.175/groups/${PERSON1}_${PERSON2}/project-part-1.git project-part-1

改进阻塞缓存

只有对数据缓存实现存储队列才有意义,但我们希望保持指令缓存(I$)的设计与实验7中的相同。因此,我们需要分离数据缓存和指令缓存的设计。src/includes/CacheTypes.bsv包含了新的缓存接口,尽管它们看起来是相同的:

interface ICache;

method Action req(Addr a);

method ActionValue#(MemResp) resp;

endinterface

interface DCache;

method Action req(MemReq r);

method ActionValue#(MemResp) resp;

endinterface

你将在ICache.bsv中实现你的I$,在DCache.bsv中实现你的D$。

实验7缓存设计的缺陷

在实验7中,缓存的req方法会检查标签数组,判断访问是缓存命中还是未命中,并执行处理两种情况所需的动作。然而,如果你查看实验7的编译输出,你会发现处理器的内存阶段规则与D$中替换缓存行、发送内存请求和接收内存响应的几条规则冲突。这些冲突是因为编译器无法准确判断当它们在你的处理器调用的req方法中被操作时,缓存的数据数组、标签数组和状态寄存器何时会被更新。

编译器还将内存阶段规则视为“更紧急”的,所以当内存阶段触发时,D$的规则不能在同一周期内触发。这种冲突不会影响缓存设计的正确性,但可能会损害性能。

解决规则冲突

为了消除这些冲突,我们在D$中添加了一个名为reqQ的单元素旁路FIFO。所有来自处理器的请求首先进入reqQ,在D$中得到处理后出队。更具体地说,req方法只是将传入的请求入队到reqQ中,我们将创建一个新规则,例如doReq,来完成原本在req方法中完成的工作(即从reqQ出队请求以便在没有其他请求的情况下进行处理)。

doReq规则的显式防护将使其与D$中的其他规则互斥,并消除这些冲突。由于reqQ是一个旁路FIFO,D$的命中延迟仍然是一个周期。

**练习1(10分):**将改进的D$(带旁路FIFO)集成到处理器中。以下是你需要做的简要概述:

从实验7复制

Bht.bsv到src/includes/Bht.bsv。在`src/

Proc.bsv中完成处理器流水线。你可以用你在实验7中的WithCache.bsv中编写的代码来完成部分完成的代码。 > >3. 在src/includes/ICache.bsv中实现I$。你可以直接使用实验7中的缓存设计。 > >4. 在src/includes/DCache.bsv的mkDCache模块中实现改进的D$设计。 > >5. 在scemi/sim`文件夹下运行以下命令构建处理器:

>

$ build -v cache这一次,你不应该看到与

mkProc内部规则冲突相关的任何警告。

- 在

scemi/sim文件夹下运行以下命令测试处理器:$ ./run_asm.sh cache和

$ ./run_bmarks.sh cachebluesim的标准输出将被重定向到

scemi/sim/logs文件夹下的日志文件。对于新的汇编测试cache_conflict.S,IPC应该在0.9左右。如果你得到的IPC远低于0.9,那么你的代码中可能有错误。

**讨论问题1(5分):**即使每次循环迭代都有一个存储未命中,解释为什么汇编测试

cache_conflict.S的IPC这么高。源代码位于programs/assembly/src。

添加存储队列

现在,我们将向D$添加存储队列。

存储队列模块接口

我们在src/includes/StQ.bsv中提供了一个参数化的n条目存储队列的实现。每个存储队列条目的类型就是MemReq类型,接口是:

typedef MemReq StQEntry;

interface StQ#(numeric type n);

method Action enq(StQEntry e);

method Action deq;

method ActionValue#(StQEntry) issue;

method Maybe#(Data) search(Addr a);

method Bool notEmpty;

method Bool notFull;

method Bool isIssued;

endinterface

存储队列与无冲突FIFO非常相似,但它具有一些独特的接口方法。

issue方法:返回存储队列中最旧的条目(即FIFO.first),并在存储队列内设置一个状态位。后续对issue方法的调用将被阻塞,直到此状态位被清除。deq方法:从存储队列中移除最旧的条目,并清除由issue方法设置的状态位。search(Addr a)方法:返回存储队列中地址字段等于方法参数a的最年轻条目的数据字段。如果存储队列中没有写入地址a的条目,则该方法将返回Invalid。

你可以查看此模块的实现以更好地理解每个接口方法的行为。

插入到存储队列

设stq表示在D$内实例化的存储队列。如课堂上所述,来自处理器的存储请求应放入stq。由于我们在D$中引入了旁路FIFOreqQ,我们应该在从reqQ出队后将存储请求入队到stq。注意,存储请求不能在D$的req方法中直接入队到stq,因为这可能导致加载绕过较年轻存储的值。换句话说,所有来自处理器的请求仍然首先入队到reqQ。

还应该注意的是,将存储放入stq可以与几乎所有其他操作(如处理未命中)并行进行,因为存储队列的`

enq`方法被设计为与其他方法无冲突。

从存储队列发出

如果缓存当前没有处理任何请求,我们可以处理存储队列中最旧的条目或reqQ.first中的传入加载请求。来自处理器的加载请求应优先于存储队列。也就是说,如果stq有有效条目但reqQ.first有加载请求,那么我们处理加载请求。否则,我们调用stq的issue方法来获取最旧的存储以进行处理。

注意,当存储提交(即将数据写入缓存)时,才从存储队列中出队存储,而不是在处理开始时。这使我们能够实现一些稍后(但不在本节中)将实现的优化。issue和dequeue方法被设计为可以在同一规则中调用,以便我们在存储在缓存中命中时可以同时调用这两个方法。

还应该注意的是,当reqQ.first是存储请求时,不应阻塞从存储队列发出的存储。否则,缓存可能会死锁。

**练习2(20分):**在

src/includes/DCache.bsv的mkDCacheStQ模块中实现带存储队列的阻塞D$。你应该使用CacheTypes.bsv中已定义的数值类型StQSize作为存储队列的大小。你可以通过在scemi/sim文件夹下运行以下命令来构建处理器:$ build -v stq并通过运行以下命令来测试它:

$ ./run_asm.sh stq和

$ ./run_bmarks.sh stq

为了避免由于编译器调度努力不足导致的冲突,我们建议将doReq规则分为两个规则:一个用于存储,另一个用于加载。

对于新的汇编测试stq.S,由于存储未命中的延迟几乎完全被存储队列隐藏,IPC应该在0.9以上。然而,你可能不会看到基准程序的任何性能改善。

在存储未命中下加载命中

尽管存储队列显著提高了汇编测试stq.S的性能,但它对基准程序没有任何影响。为了理解我们的缓存设计的局限性,让我们考虑一个情况:一个存储指令后跟一个加法指令,然后是一个加载指令。在这种情况下,存储将在缓存中开始处理,然后才发送加载请求到缓存。如果存储发生缓存未命中,即使加载可能在缓存中命中,加载也会被阻塞。也就是说,存储队列未能隐藏存储未命中的延迟。

为了在不过度复杂设计的情况下获得更好的性能,我们可以允许在存储未命中的同时发生加载命中。具体来说,假设reqQ.first是一个加载请求。如果缓存没有处理其他请求,我们当然可以处理reqQ.first。然而,如果存储请求正在等待尚未到达的来自内存的响应,我们可以尝试处理加载请求,检查它是否在存储队列或缓存中命中。如果加载在存储队列或缓存中命中,我们可以从reqQ中出队它,从存储队列转发数据或从缓存读取数据,并将加载的值返回给处理器。如果加载是未命中,我们不

采取进一步行动,只需将其保留在reqQ中。

注意,允许加载命中时没有结构冒险,因为待处理的存储未命中不访问缓存或其状态。我们还应注意,加载命中不能与加载未命中同时发生,因为我们不希望加载响应乱序到达。

为方便起见,我们在CacheTypes.bsv中定义的WideMem接口中添加了一个名为respValid的额外方法。当WideMem有响应可用时(即等于WideMem的resp方法的防护),此方法将返回True。

**练习3(10分):**在

src/includes/DCache.bsv的mkDCacheLHUSM模块中实现允许在存储未命中下加载命中的带存储队列的阻塞D$。你可以通过在scemi/sim文件夹下运行以下命令来构建处理器:$ build -v lhusm并通过运行以下命令来测试它:

$ ./run_asm.sh lhusm和

$ ./run_bmarks.sh lhusm你应该能看到一些基准程序性能的提升。

**讨论问题2(5分):**在未优化的汇编代码中,程序可能只是为了在下一条指令中读取而写入内存:

sw x1, 0(x2) lw x3, 0(x2) add x4, x3, x3这经常发生在程序将其参数保存到栈上的子程序中。优化编译器(例如GCC)可以将寄存器的值保持在寄存器中以加快对这些数据的访问,而不是将寄存器的值写出到内存。这种优化编译器的行为如何影响你刚刚设计的内容?存储队列是否仍然重要?

**讨论问题3(5分):**与练习1和2中的缓存设计相比,你在每个基准的性能上看到了多少改进?

© 2016 麻省理工学院。版权所有。

项目2: 缓存一致性

这部分以及项目的第一部分将在12月14日,星期三下午3点EST举行的项目展示中到期。

概述

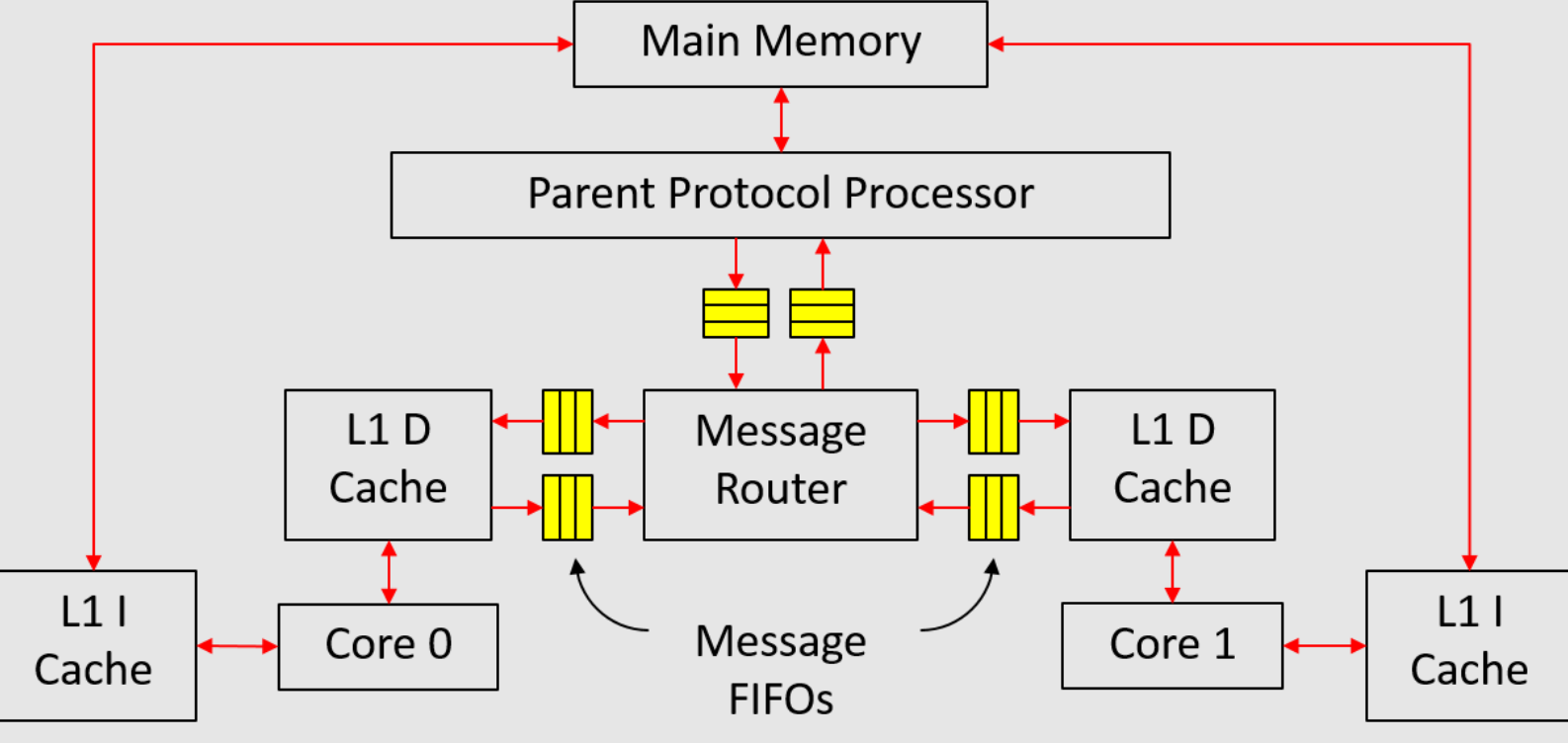

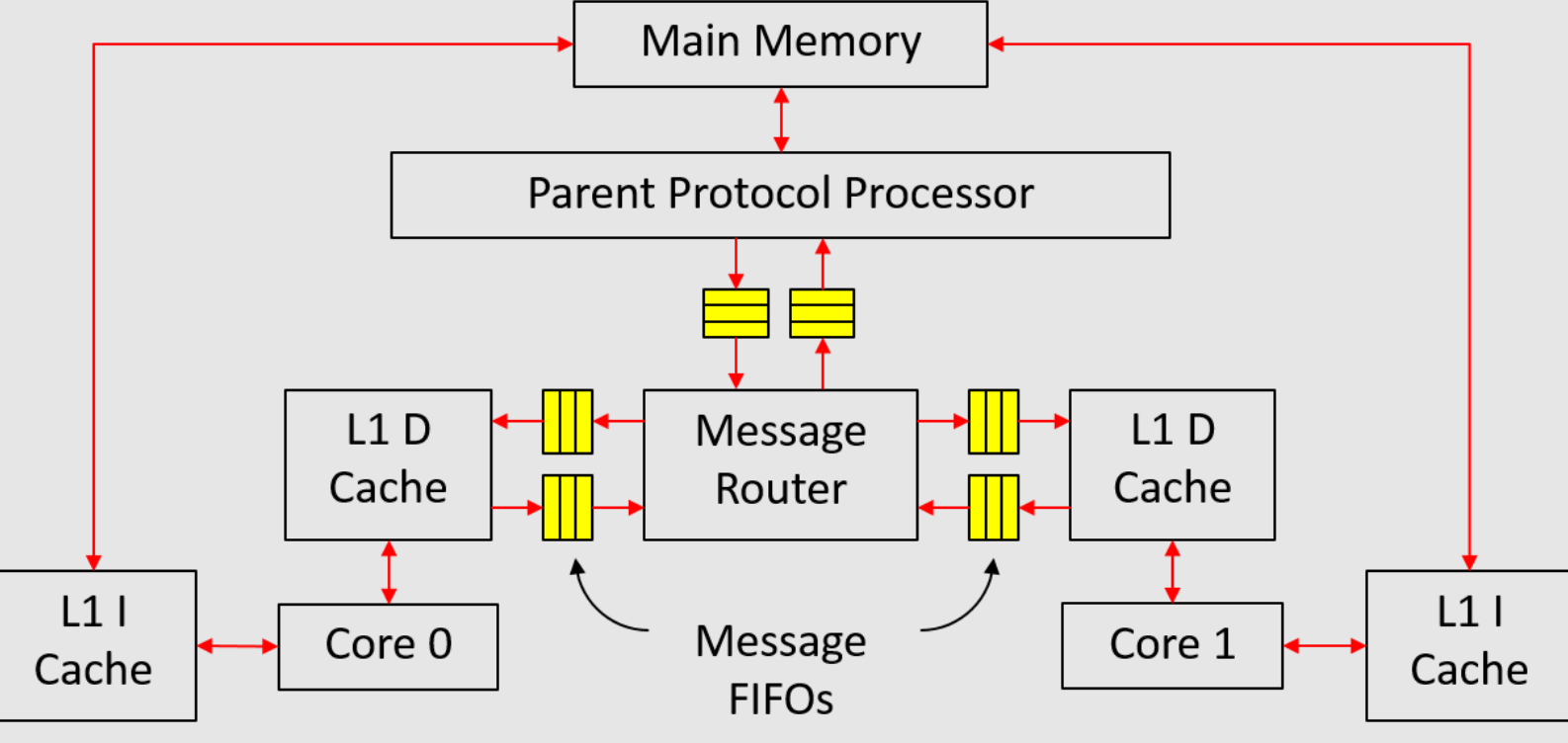

在本项目部分,我们将在仿真中实现一个多核系统,如图1所示。该系统由两个核心组成,每个核心都有自己的私有缓存。数据缓存(D缓存)和主内存通过课堂上介绍的MSI协议保持一致。由于我们没有自修改程序,指令缓存(I缓存)可以直接访问内存,无需经过任何一致性事务。

|

|---|

| 图1:多核系统 |

由于该系统相当复杂,我们尝试将实现分为多个小步骤,并为每个步骤提供了测试台。但是,通过测试台并不意味着实现是100%正确的。

实现存储层次结构的单元

消息FIFO

消息FIFO传输请求和响应消息。对于从子级到父级的消息FIFO,它传输升级请求和降级响应。对于从父级到子级的消息FIFO,它传输降级请求和升级响应。

消息FIFO传输的消息类型在src/includes/CacheTypes.bsv中定义如下:

#![allow(unused)] fn main() { typedef struct { CoreID child; Addr addr; MSI state; Maybe#(CacheLine) data; } CacheMemResp deriving(Eq, Bits, FShow); typedef struct { CoreID child; Addr addr; MSI state; } CacheMemReq deriving(Eq, Bits, FShow); typedef union tagged { CacheMemReq Req; CacheMemResp Resp; } CacheMemMessage deriving(Eq, Bits, FShow); }

CacheMemResp是从子级到父级的降级响应以及从父级到子级的升级响应的类型。第一个字段child是消息传递中涉及的D缓存的ID。CoreID类型在Types.bsv中定义。第三个字段state是子级为降级响应降级到的MSI状态,或子级可以为升级响应升级到的MSI状态。

CacheMemReq是从子级到父级的升级请求和从父级到子级的降级请求的类型。第三个字段state是子级想要为升级请求升级到的MSI状态,或子级应该为降级请求降级到的MSI状态。

消息FIFO的接口也在CacheTypes.bsv中定义:

#![allow(unused)] fn main() { interface MessageFifo#(numeric type n); method Action enq_resp(CacheMemResp d); method Action enq_req(CacheMemReq d); method Bool hasResp; method Bool hasReq; method Bool notEmpty; method CacheMemMessage first; method Action deq; endinterface }

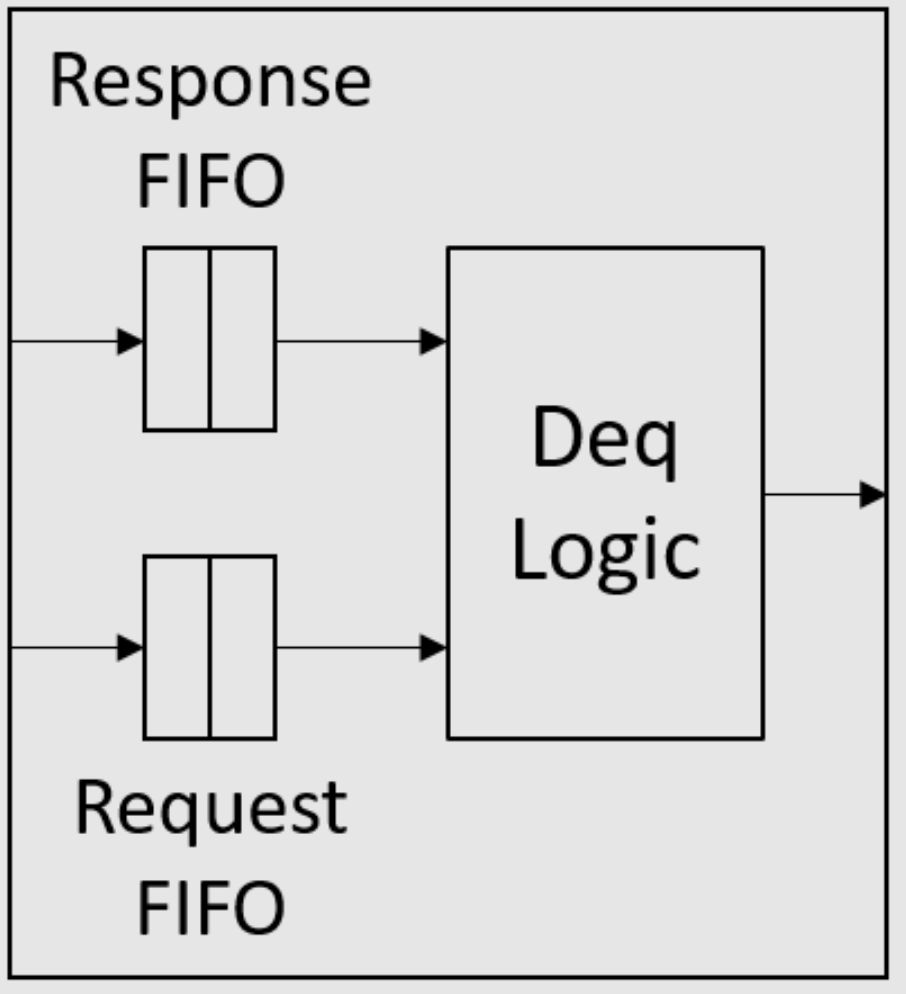

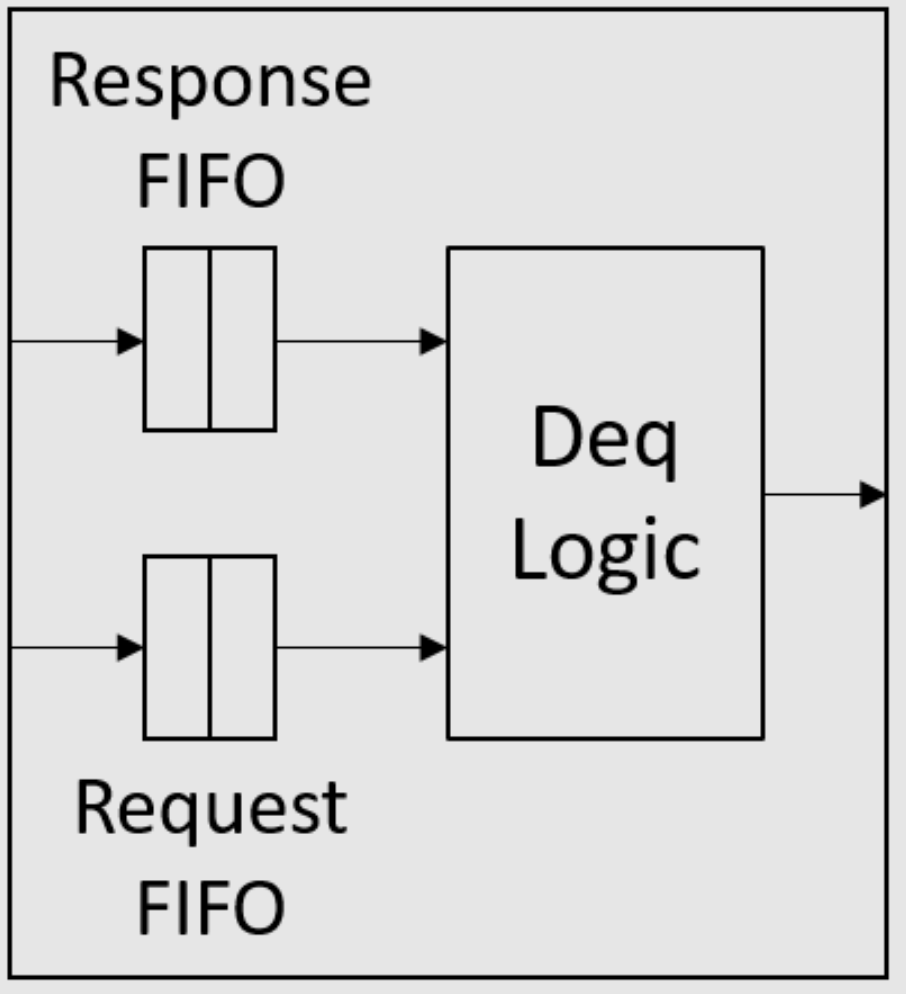

接口有两个入队方法(enq_resp和enq_req),一个用于请求,另一个用于响应。布尔标志hasResp和hasReq分别表示FIFO中是否有任何响应或请求。notEmpty标志只是hasResp和hasReq的或运算。接口只有一个first和一个deq方法,一次检索一条消息。

如课堂上所述,当它们都位于同一个消息FIFO中时,请求永远不应阻止响应。为了确保这一点,我们可以使用两个FIFO实现消息FIFO,如图2所示。在入队端,所有请求都入队到请求FIFO,而所有响应都入队到另一个响应

FIFO。在出队端,响应FIFO优先于请求FIFO,即只要响应FIFO不为空,deq方法就应该出队响应FIFO。接口定义中的数值类型n是响应/请求FIFO的大小。

|

|---|

| 图2:消息FIFO的结构 |

**练习1(10分):**在

src/includes/MessageFifo.bsv中实现消息FIFO(mkMessageFifo模块)。我们在unit_test/message-fifo-test文件夹中提供了一个简单的测试。使用make编译,并使用./simTb运行测试。

消息路由器

消息路由器连接所有L1 D缓存和父协议处理器。我们将在src/includes/MessageRouter.bsv中实现这个模块。它声明为:

module mkMessageRouter(

Vector#(CoreNum, MessageGet) c2r, Vector#(CoreNum, MessagePut) r2c,

MessageGet m2r, MessagePut r2m,

Empty ifc

);

MessageGet和MessagePut接口只是MessageFibo接口的限制视图,它们在CacheTypes.bsv中定义:

interface MessageGet;

method Bool hasResp;

method Bool hasReq;

method Bool notEmpty;

method CacheMemMessage first;

method Action deq;

endinterface

interface MessagePut;

method Action enq_resp(CacheMemResp d);

method Action enq_req(CacheMemReq d);

endinterface

我们提供了toMessageGet和toMessagePut函数,将MessageFifo接口转换为MessageGet和MessagePut接口。以下是每个模块参数的介绍:

c2r是每个L1 D缓存的消息FIFO的接口。r2c是到每个L1 D缓存的消息FIFO的接口。m2r是来自父协议处理器的消息FIFO的接口。r2m是到父协议处理器的消息FIFO的接口。

此模块的主要功能分为两部分:

- 将消息从父级(

m2r)发送到正确的L1 D缓存(r2c), - 将消息从L1 D缓存(

c2r)发送到父级(r2m)。

应该注意的是,响应消息优先于请求消息,就像消息FIFO中的情况一样。

**练习2(10分):**在

src/includes/MessageRouter.bsv中实现mkMessageRouter模块。我们在unit_test/message-router-test文件夹中提供了一个简单的测试。运行以下命令进行编译和运行:$ make $ ./simTb

L1数据缓存

阻塞L1 D缓存(不带存储队列)将在src/includes/DCache.bsv中实现:

module mkDCache#(CoreID id)(MessageGet fromMem, MessagePut toMem, RefDMem refDMem, DCache ifc);

以下是每个模块参数和参数的介绍:

id是核心ID,它将附加到发送到父协议处理器的每条消息上。fromMem是来自父协议处理器的消息FIFO的接口(或更准确地说是消息路由器),因此可以从此接口读出降级请求和升级响应。toMem是到父协议处理器的消息FIFO的接口,因此应将升级请求和降级响应发送到此接口。refDMem用于调试,目前你不需要担心它。

模块返回的`

DCache接口在CacheTypes.bsv`中定义如下:

interface DCache;

method Action req(MemReq r);

method ActionValue#(MemResp) resp;

endinterface

你可能已经注意到MemOp类型(在MemTypes.bsv中定义),它是MemReq结构体(在MemTypes.bsv中定义)的op字段的类型,现在有五个值:Ld, St, Lr, Sc和Fence。现在你只需要处理Ld和St请求。你可以在DCache接口的req方法中添加逻辑,如果检测到除Ld或St之外的请求则报告错误。

MemReq类型还有一个新字段rid,这是用于调试的请求ID。rid是Bit\#(32)类型,对于同一核心的每个请求应该是唯一的。

我们将实现一个16条目直接映射的L1 D缓存(缓存行数定义为CacheTypes.bsv中的类型CacheRows)。我们建议使用寄存器向量来实现缓存数组以分配初始值。我们还在CacheTypes.bsv中提供了一些有用的函数。

MSI状态类型在CacheTypes.bsv中定义:

typedef enum {M, S, I} MSI deriving(Bits, Eq, FShow);

我们使MSI类型成为Ord类型类的一个实例,因此我们可以在它上面应用比较运算符(>, <, >=, <=等)。顺序是M > S > I。

**练习3(10分):**在

src/includes/DCache.bsv中实现mkDCache模块。这应该是一个不带存储队列的阻塞缓存。你可能想使用最终项目第一部分练习1中的变通方法,以避免将来在D缓存集成到处理器流水线时的调度冲突。我们在unit_test/cache-test文件夹中提供了一个简单的测试。要编译和测试,请运行$ make $ ./simTb

父协议处理器

父协议处理器将在src/includes/PPP.bsv中实现:

module mkPPP(MessageGet c2m, MessagePut m2c, WideMem mem, Empty ifc);

以下是每个模块参数的介绍:

c2m是来自L1 D缓存的消息FIFO的接口(实际上来自消息路由器),可以从此接口读出升级请求和降级响应。m2c是到L1 D缓存的消息FIFO的接口(实际上到消息路由器),应将降级请求和升级响应发送到此接口。mem是主内存的接口,我们已经在项目的第一部分中使用过。

在讲座中,父协议处理器中的目录记录了每个可能地址的MSI状态。然而,对于32位地址空间,这将占用大量存储空间。为了减少目录所需的存储量,我们注意到我们只需要跟踪存在于L1 D缓存中的地址。具体来说,我们可以按照以下方式实现目录:

Vector#(CoreNum, Vector#(CacheRows, Reg#(MSI))) childState <- replicateM(replicateM(mkReg(I)));

Vector#(CoreNum, Vector#(CacheRows, Reg#(CacheTag))) childTag <- replicateM(replicateM(mkRegU));

当父协议处理器想要了解核心i上地址a的大致MSI状态时,它可以首先读出tag=childTag[i][getIndex(a)]。如果tag与getTag(a)不匹配,则MS

I状态必须是I。否则,状态应该是childState[i][getIndex(a)]。通过这种方式,我们大大减少了目录所需的存储量,但我们需要在子状态发生任何变化时维护childTag数组。

与讲座中的另一个不同之处在于,主内存数据应使用mem接口访问,而讲座只是假设组合读取数据。

**练习4(10分):**在

src/includes/PPP.bsv中实现mkPPP模块。我们在unit_test/ppp-test文件夹中提供了一个简单的测试。使用make编译,并使用./simTb运行测试。

测试整个存储层次结构

既然我们已经构建了存储系统的每个部分,现在我们将它们放在一起,并使用uint_test/sc-test文件夹中的测试台测试整个存储层次结构。测试将利用mkDCache的"RefDMem refDMem"参数,并且我们需要在mkDCache中添加一些对refDMem方法的调用。refDMem由一个用于一致内存的参考模型(在src/ref/RefSCMem.bsv中的mkRefSCMem)返回,该模型可以基于对refDMem方法的调用检测一致性违规。RefDMem在src/ref/RefTypes.bsv中定义如下:

interface RefDMem;

method Action issue(MemReq req);

method Action commit(MemReq req, Maybe#(CacheLine) line, Maybe#(MemResp) resp);

endinterface

对于mkDCache中的req方法中的每个请求,都应调用issue方法:

method Action req(MemReq r);

refDMem.issue(r);

// 然后处理r

endmethod

这将告诉参考模型发送到D缓存的所有请求的程序顺序。

当请求处理完成时,应调用commit方法,即当Ld请求获得加载结果或St请求写入缓存的数据数组时。以下是commit的每个方法参数的介绍:

-

req是正在提交(即完成处理)的请求。 -

line是req正在访问的缓存行的原始值。这里的缓存行是指具有行地址getLineAddr(req.addr)的64B数据块。因此,它不一定是指D缓存中的行,因为D缓存可能只包含垃圾数据。由于line是原始值,在提交存储请求的情况下,它应该是存储修改之前的值。如果我们知道缓存行数据,

line应设置为tagged Valid。否则,我们将line设置为tagged Invalid。在mkDCache的情况下,当请求提交时,我们总是知道缓存行数据,因为它要么已经在D缓存中,要么在来自父级的升级响应中。因此,line应始终设置为tagged Valid。 -

resp是发送回核心的req的响应。如果有响应发送回核心,则resp应为tagged Valid response;否则应为tagged Invalid。对于Ld请求,resp应为tagged Valid (load result)。对于St请求,resp应为tagged Invalid,因为D缓存从不为St请求发送响应。

当mkDCache调用commit(req, line, resp)方法时,一致内存的参考模型将检查以下事项:

- 是否可以提交

req。如果req尚未发出(即从未为req调用issue方法),或者同一核心的一些较

旧请求尚未提交(即非法重新排序内存请求),则不能提交req。

2. 缓存行值line是否正确。如果line是Invalid,则不执行检查。

3. 响应resp是否正确。

uint_test/sc-test文件夹中的测试台实例化了一个完整的内存系统,并向每个L1 D缓存提供随机请求。它依赖于参考模型来检测内存系统内部的一致性违规。

**练习5(10分):**在

src/includes/DCache.bsv中的mkDCache模块中添加对refDMem方法的调用。然后进入uint_test/sc-test文件夹,使用make编译测试台。这将创建两个仿真二进制文件:simTb_2用于两个D缓存,simTb_4用于四个D缓存。你也可以分别通过make tb_2和make tb_4编译它们。运行测试:

$ ./simTb_2 > dram_2.txt和

$ ./simTb_4 > dram_4.txt

dram_*.txt将包含mkWideMemFromDDR3模块的调试输出,即与主内存的请求和响应。主内存由mem.vmh初始化,这是一个空的VMH文件。这将初始化主内存的每个字节为0xAA。

请求发送到D缓存i的跟踪可以在driver_<i>_trace.out中找到。

测试程序

我们可以使用以下命令编译测试程序:

$ cd programs/assembly

$ make

$ cd ../benchmarks

$ make

$ cd ../mc_bench

$ make

$ make -f Makefile.tso

programs/assembly 和 programs/benchmarks 包含单核心的汇编和基准测试程序。在这些程序中,只有核心0会执行程序,而核心1将在启动后不久进入 while(1) 循环。

programs/mc_bench 包含多核基准测试程序。在这些程序的主函数中,首先获取核心ID(即 mhartid CSR),然后根据核心ID跳转到不同的函数。一些程序只使用普通的加载和存储,而其他程序则使用原子指令(加载保留和条件存储)。